UI Design Blinks 2012

By Gerd Waloszek

Welcome

to this column of brief, blog-like articles about various UI design topics – inspired

by my daily work, conference visits, books, or just everyday life experiences.

As in a blog roll, the articles are listed in reverse chronological order.

See also the overviews of UI Design Blinks from other years: 2010, 2011, 2013.

December 12, 2012: Meeting the Drum Again...

This October, I revived an old design proposal of mine, and yesterday I inadvertently came across the implementation of something similar. "Am I too backwards-oriented, or was I sometimes ahead of my time?" I asked myself. Anyway, after having recovered from my surprise, I decided to write a UI Design Blink about my new discovery.

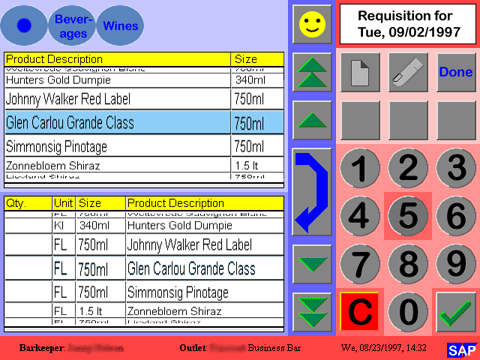

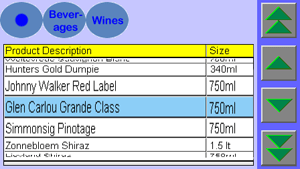

Throughout 1997 I was involved in an SAP project exploring touchscreen applications for hotels and restaurants. In this "hotel project", I built HTML prototypes that were based on a 10x7 grid, with grid cells of 64x64 pixels, plus a bottom row for information, which resulted in a screen size of 640x480 pixels (VGA screen). The grid cells correspond to a touch target size of about 2.3 cm (at 72 dpi) or 1.7 cm (at 96 dpi), which is much larger than today's recommendations (Rachel Hinman, for example, recommends 1 cm). The screens (which one of the developers called "idiot screens" at the time) were designed to be used exclusively with fingers. "Tap" (or "point") was the only gesture available; "swipe" and other gestures were discouraged or unknown back then. Therefore, some design elements were implemented differently than one would do today. Below is a sample screen of the prototype (Figure 1). I have to admit that I blush when I see the colors. At that time we used web-safe colors, but a visual designer would have done much better even back then. My static prototype was therefore polished by a graphic designer in Palo Alto and transferred into a dynamic Java version. I am still trying to find out whether the developers really implemented the feature that I want to report on here.

Figure 1: Static HTML prototype of a touchscreen application for hotels (around 1997; click image for larger versions)
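For readers who want to check the grid arithmetic, here is a minimal Python sketch. It only uses the numbers given above (10x7 grid, 64-pixel cells, 640x480 screen); the height of the information row is not stated in the text and is merely derived here as the remainder. The function and variable names are my own.

```python
CM_PER_INCH = 2.54

def pixels_to_cm(pixels: int, dpi: int) -> float:
    """Physical length in centimeters of `pixels` at a given display density."""
    return pixels / dpi * CM_PER_INCH

GRID_COLUMNS, GRID_ROWS, CELL_PX = 10, 7, 64
SCREEN_WIDTH_PX, SCREEN_HEIGHT_PX = 640, 480  # VGA

grid_width_px = GRID_COLUMNS * CELL_PX    # 640: fills the full screen width
grid_height_px = GRID_ROWS * CELL_PX      # 448
# Derived, not stated in the article: what is left over for the information row.
info_row_height_px = SCREEN_HEIGHT_PX - grid_height_px  # 32

for dpi in (72, 96):
    print(f"{CELL_PX} px at {dpi} dpi = {pixels_to_cm(CELL_PX, dpi):.2f} cm")
# prints:
# 64 px at 72 dpi = 2.26 cm
# 64 px at 96 dpi = 1.69 cm
```

The computed values are close to the touch target sizes quoted above and, in both cases, well above a 1 cm recommendation.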

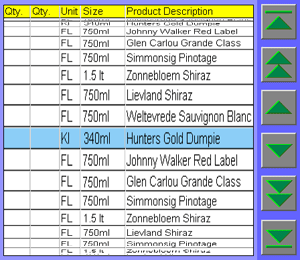

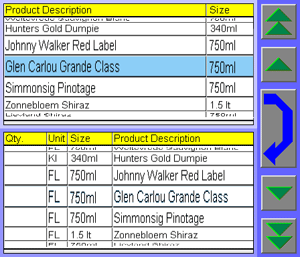

So much for the opening speech. Now I would like to draw your attention to the two lists on the left-hand side of the prototype. Like in the old Apple "font mover pattern," you can move items from one list to the other and back again. I called the two lists "drums" when I invented them (there may have been "parallel" inventions I didn't know about). Drums are a special list variant, in which the list looks like it is glued to a cylinder or drum (like in gambling machines). Because of the resulting 3D effect and the "blown up" middle row, they are better suited to selecting items by tapping. I conceived the list items to be arranged in a cyclical fashion, like in a real drum, but I never had a working prototype on hand to verify whether this really makes sense. Drums could also be arranged horizontally so that the columns would scroll.

By the way, one of Adobe Photoshop's filters helped me distort an ordinary list to achieve the 3D-effect of a drum.

What about the buttons to the right of the drums? As I have already mentioned, "swipe" gestures were considered harmful at that time, only "taps" were allowed. Users therefore had to tap buttons to turn the drum (row-wise, page-wise; I also included "go to first item" and "go to last item" buttons for longer lists). The buttons at the top navigate users within a hierarchy of items. I called this mechanism a "stack" at that time (and, together with a drum, a "stack drum"). Today I would call it "breadcrumbs."
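Since I never had a working prototype of the cyclic drum, the following Python sketch is only a thought experiment about how it might behave, not a description of what was implemented. The class name, the method names, and the default of five visible rows are assumptions made for illustration. The methods correspond to the buttons described above: row-wise and page-wise turning, plus "go to first item" and "go to last item".

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class Drum:
    """Cyclic list; the item at `center` sits in the enlarged middle row."""
    items: list[str]
    visible_rows: int = 5   # assumed; must be odd so that a middle row exists
    center: int = 0         # index of the item shown in the middle row

    def __post_init__(self) -> None:
        if not self.items:
            raise ValueError("a drum needs at least one item")
        if self.visible_rows < 1 or self.visible_rows % 2 == 0:
            raise ValueError("visible_rows must be a positive odd number")

    def turn(self, rows: int) -> None:
        """Turn the drum by `rows` (negative = backwards), wrapping around."""
        self.center = (self.center + rows) % len(self.items)

    # One method per button next to the drum.
    def row_up(self) -> None: self.turn(-1)
    def row_down(self) -> None: self.turn(1)
    def page_up(self) -> None: self.turn(-self.visible_rows)
    def page_down(self) -> None: self.turn(self.visible_rows)
    def go_first(self) -> None: self.center = 0
    def go_last(self) -> None: self.center = len(self.items) - 1

    @property
    def selected(self) -> str:
        return self.items[self.center]

    def window(self) -> list[str]:
        """Items currently visible, top to bottom, wrapping at both ends."""
        half = self.visible_rows // 2
        n = len(self.items)
        return [self.items[(self.center + off) % n] for off in range(-half, half + 1)]

drum = Drum(["Beer", "Coffee", "Juice", "Tea", "Water", "Wine"], visible_rows=3)
print(drum.window())   # ['Wine', 'Beer', 'Coffee']
drum.row_up()
print(drum.window())   # ['Water', 'Wine', 'Beer']
```

The sketch makes one consequence of the cyclic arrangement visible: the last item appears directly above the first one, which is exactly the point I would have liked to verify with real users.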

I later consolidated my explorations of touchscreens into a set of guidelines (Interaction Design Guide for Touchscreen Applications). Such guidelines were a rare beast at that time; although completely outdated, the guide is still available for historical interest on the SAP Design Guild Website. Here are the drum variants that I included in the guidelines (direct link to lists and drums page):

| Drum | Stack drum |

| Long drum | Double drum |

Figures 2-5: Variants of the drum touchscreen control (from Interaction Design Guide for Touchscreen Applications on the SAP Design Guild; click images for larger versions)

Last, but not least, I should mention that the prototype above uses a combination of a stack drum and a double drum.

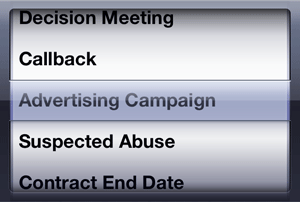

So what surprised me yesterday? It was something that reminded me strongly of my drum design proposal, but it looks much nicer and also works a bit differently because it utilizes swipe gestures: the iPhone's picker, which I found in Luke Wroblewski's book Mobile First (if I were an iPhone user, I would of course have encountered the picker much earlier). Below, I present two variants of the iOS picker that a colleague provided for comparison:

| Figure 6: iOS picker (click image for whole screen in German) | Figure 7: iOS date picker (click image for whole screen in German) |

All in all, the picker implements the same basic idea of listing items on a turnable cylinder and enlarging the central items for easier picking. However, in the iOS version you can turn the cylinder with a swipe gesture. Apple's date picker consists of three to four independent pickers that allow users to synthesize a date. As for the picker itself, Apple writes, "A picker is a generic version of the date and time picker. As with a date and time picker, users spin the wheel (or wheels) of a picker until the value they want appears."

We can see that, after about 15 years, a lot has changed in the world of touchscreens: Designs have been polished to the extreme (or the extremely "natural" – some blame it on "skeuomorphism"), and new gestures add more flexibility and elegance to the interactions. I am itching to redesign my old prototypes for an iPhone or iPad using the new options. But at the moment, I am unable to create even the simplest prototype with Apple's development environment Xcode. It looks as if I'll have to get some practice there, first...

P. S.: I never considered applying for a patent for the drum at that time. In hindsight, perhaps I should have.

December 5, 2012: A Few Books and Links for Familiarizing Oneself with Mobile

Recently, I reviewed Rachel Hinman's book, The Mobile Frontier, and in the course of the review, I came across a couple of books and links that, in my opinion, might help you, too, get a foothold in the new and exciting realm of mobile design. The topics covered comprise getting ready for mobile, responsive (Web) design, HTML5, and CSS3.

Figure 1: My private collection of e-books for mobile that I would like to present here

All the books that I present here are recent e-book purchases. I have read a lot of them already, but am still in the middle of others. A colleague suggested some of these books to me. I came across most of the links presented here while preparing articles. All in all, I hope that my small collection of reading suggestions is helpful for you.

Getting Ready for Mobile

The following two "non-technical" books will give you a jump start into the mobile design field:

- Luke Wroblewski (2011). Mobile First. A Book Apart • ISBN: 978-1-937557-02-7 • Description at A Book Apart • Short presentation

- Rachel Hinman (2012). The Mobile Frontier: A Guide to Creating Mobile Experiences. Rosenfeld Media • ISBN: 1-933820-55-1 (Paperback), ISBN: 1-933820-05-5 (Digital editions) • Description at Rosenfeld Media • Short presentation • Review

Hinman's book can be regarded as a call to action for designers to engage in the new field of mobile design, which she characterizes as "the mobile frontier". Wroblewski's book is another call to action, based on the "small" idea, as he calls it, to design Websites and applications for mobile first. While both books have similar intentions, they differ in detail and complement each other well. It's probably a good idea to start with Wroblewski.

Responsive (Web) Design

Originally promoted by Ethan Marcotte, responsive (Web) design comprises a mixture of techniques that allow HTML pages to adapt to different platforms (screen size and orientation; possibly interaction styles): fluid grids, fluid graphics, and CSS3 media queries. Here are some references that illuminate this approach:

- Ethan Marcotte (2011). Responsive Web Design. A Book Apart • ISBN: 978-0-9844425-7-7 • Description at A Book Apart • Short presentation

You can find essential parts of the book in the following articles:

- Ethan Marcotte: Responsive Web Design: www.alistapart.com/articles/responsive-web-design (A List Apart 306)

- Ethan Marcotte: Fluid Grids: www.alistapart.com/articles/fluidgrids (A List Apart 279)

- Ethan Marcotte: Fluid Images: www.alistapart.com/articles/fluid-images (A List Apart 328)

- Luke Wroblewski: Device Experience & Responsive Design (originally published: Mar 28, 2012): www.uie.com/articles/device_experiences

- Responsive Web Design (Wikipedia) • Responsive Design (Wikipedia, German)

- Responsive (Web) Design (SAP Design Guild)
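To give a rough idea of what two of these techniques boil down to, here is a small Python sketch. The first function applies the fluid-grid rule of thumb "target ÷ context = result", which turns fixed pixel widths into percentages of the containing element. The second function imitates what media queries do in a style sheet, namely select a layout depending on the viewport width. The breakpoint values and function names are hypothetical and chosen for illustration only; in a real page, both would be expressed in CSS, not in Python.

```python
def fluid_width(target_px: float, context_px: float) -> str:
    """Fluid grid rule: target / context = result, expressed as a percentage."""
    if context_px <= 0:
        raise ValueError("context width must be positive")
    return f"{target_px / context_px * 100:.4f}%"

# Hypothetical breakpoints: (minimum viewport width in px, number of columns).
BREAKPOINTS = [(0, 1), (480, 2), (768, 3)]

def columns_for(viewport_px: int) -> int:
    """Pick the column count of the widest breakpoint that still fits."""
    columns = BREAKPOINTS[0][1]
    for min_width, count in BREAKPOINTS:
        if viewport_px >= min_width:
            columns = count
    return columns

print(fluid_width(64, 640))    # 10.0000%  (a 64 px cell in a 640 px wide layout)
print(fluid_width(300, 960))   # 31.2500%
print(columns_for(320), columns_for(480), columns_for(1024))   # 1 2 3
```

Because widths are expressed relative to their container, the layout scales with the screen, while the breakpoints switch to a different arrangement when scaling alone no longer suffices.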

HTML5 and CSS3

There is currently a debate going on among designers as to whether native apps, Web applications, or mobile-optimized Websites are the better choice when designing for mobile devices. Each of these approaches has its advantages and disadvantages, as my brief overview shows (see the books by Hinman and by Wroblewski for details):

| App Type | Description | Built Using ... | Pro | Con |
| --- | --- | --- | --- | --- |
| Mobile-optimized Website | Traditional Website that is optimized for mobile consumption (for example, using responsive design) | Standard HTML | Offers universal access, no major redesign necessary | Not "uniquely" mobile |
| Web App | Mobile app that is designed like a native app but accessed through a mobile browser (not downloaded from an app store) | Platform-independent tools (HTML5, jQuery mobile, and so on) | Offers universal access, easier maintenance, better Web access (following links is easier) | Cannot use all the features that a platform offers, not so pretty, user experience not as good as in native apps, cannot reside on home screen, not in app store (limited discoverability) |
| Native App | Custom-made application, typically downloaded from an app store | Platform-specific tools | Can use all the features that a platform offers (address book, GPS, camera, audio input and other sensors, NFC, and so on), prettier, better user experience (smoother transitions) | Platform-specific, audience limited to platform |

Both Hinman and Wroblewski recommend designing both native and Web apps. Luckily, if you are opting for mobile web apps, you can build on what you already know about Web design and development. But you probably still need to familiarize yourself with HTML5 and CSS3. Here are three books that might get you going the "easy way":

- Eric Freeman, Elisabeth Robson (2011). Head First HTML5 Programming – Building Web Apps with JavaScript. O'Reilly Media • ISBN: 978-1-449-39054-9 • Assumes only basic knowledge of HTML and CSS; JavaScript knowledge is not assumed but helpful • Description at O'Reilly

- Jeremy Keith (2010). HTML5 for Web Designers. A Book Apart • ISBN: 978-0-9844425-0-8 • Description at A Book Apart

- Dan Cederholm (2010). CSS3 for Web Designers. A Book Apart • ISBN: 978-0-9844425-2-2 • Description at A Book Apart

Final Word

The "A Book Apart" books in particular are short and concise and therefore allow you to pick up speed quickly. They can often be read within a day. The other two books take more time. The Head First book also invites you to some practical exercises, taking you through the process of building a Website using HTML5 and exploring all the new HTML5 elements, including geolocation, canvas and video, local storage, and Web workers. I found the following reviewer comment about this book: "There's a saying here in the UK: 'It's a bit like Marmite, you either love it or hate it!' Well I think this applies to Head First books – people tend to love them or hate them." I personally am still undecided. This book is probably not that useful for people looking for a concise introduction or a reference. Even though it does not provide a reference, Keith's book, HTML5 for Web Designers, is probably better suited to these readers. The same applies to Cederholm's book about CSS3.

References

Are provided in the article...

November 28, 2012: Reviewing an E-book – Another Experience Report

My recent review of Rachel Hinman's book, The Mobile Frontier, was my third review of an e-book. It was also the first one for which I used a tablet computer – an iPad – for reading and annotation. However, I wrote the review itself on different laptop computers. In the following, I would like to share some of my experiences in this endeavor.

Reviewing a book takes quite a while, several weeks or more, and the process is typically hampered by unexpected and often long interruptions. Therefore, when I read a book for a review, I highlight relevant text passages and sometimes also add comments so that I can easily pick up where I left off after an interruption. Moreover, there is also often a longer break between reading the book and writing the review. If I did not mark relevant text passages in the book, it would appear to me as if I had never read it. In printed books, I use a marker pen for the highlighting; for e-books, I had to switch to "electronic" highlighting. This is where the challenges began...

Highlighting Text

While conducting my first purely e-book review, a review of Analyzing Social Media Networks with NodeXL by Derek Hansen, Ben Shneiderman, and Marc Smith in 2010, I encountered the issue that Adobe Acrobat Reader did not allow me to highlight text passages in the PDF version of the book (see this UI Blink for details). However, I found a work-around by using the Mac OS X Preview application. This time around, I ran into the same issue on the iPad: The e-book reader, iBooks, does not allow you to highlight text passages in PDF documents with a marker tool either. This restriction required me to additionally download the ePub version of the book. Incidentally, Mac OS X does not offer a reader application for this format. I had to download a free ePub reader for the Mac and chose calibre.

On a laptop computer, I use the mouse to select text for highlighting. This is easy because I am well practiced in using it. However, selecting text on the iPad with my fingers was tedious, to say the least. Often, I needed several attempts before the text was selected and marked the way I wanted it, which slowed down my "average" reading speed considerably. My finger tips are just not small enough for this. Perhaps I should use a dedicated iPad pen?

Paging and Navigation

PDF versions of books typically present pages as they are printed (a "screen version" may also be available, as is the case for Nathan Shedroff's book, Design is the Problem, which is the first e-book that I reviewed). E-books in ePub format, however, follow a different approach, creating screen pages dynamically according to the formatting options (such as font type and size) that the user has selected. Depending on these settings, Hinman's book, with its 281 pages in PDF format, can have between a few hundred and several thousand pages when viewed in the iBooks app in ePub format. Navigating within e-books with so many pages can become confusing at times... Moreover, printed page numbers in tables of contents or indexes of course make little sense, but can still be used for navigation if they are links.

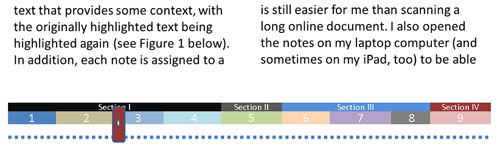

Figure 1: E-book slider that indicates sections and chapters in the book – a first idea...

You have quite a few options for navigating within an e-book: you can scroll page-wise through the content; scroll using a slider at the bottom or right (for the continuous vertical scroll mode in iBooks); use the search function; or resort to the table of contents or the index. But none of these options makes me really happy. Somehow, the "direct" and "intuitive" NUI interaction is too slow and indirect for me. This is probably just a question of practice though... For example, I find page-wise scrolling in e-books "sticky" and more cumbersome than paging through printed books where you can flip pages at high speed to get where you want to go. Sure, depending on your viewing mode, you can scroll using the bars at the bottom or to the right to move faster through the book, but the pages themselves do not flip to provide orientation – only the page numbers and chapter titles change. This is not the user experience that I would like to have and that a book provides. Moreover, in the iBooks app, chapter titles do not indicate their level and are not always informative (they can, for example, be ambiguous). I also find it "fiddly" to get exactly where I want to go. Usually, I have to correct my "landing position" by scrolling a few pages back and forth. So, here comes a personal design proposal: I would like to have a scrollbar with different colors or gray levels that indicate the book chapters or sections, easy-to-hit anchor points for the chapter/section beginnings, and perhaps some sort of zoom mechanism for "tuning in" on the target page. Of course, a real-time animation of the flipping pages would be welcome, too, but this would probably require a 32-core processor...
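The anchor-point part of this proposal can be sketched in a few lines of Python. This is only an illustration of the idea, not a description of how any e-book reader works: the chapter start pages, the snap distance of three pages, and the function names are all invented for the example (only the total of 281 pages is taken from the PDF version mentioned above).

```python
from bisect import bisect_right

def chapter_at(page: int, chapter_starts: list[int]) -> int:
    """Index of the chapter containing `page`; `chapter_starts` is sorted
    and its first entry is the first page of the book."""
    return bisect_right(chapter_starts, page) - 1

def slider_to_page(fraction: float, total_pages: int,
                   chapter_starts: list[int], snap_pages: int = 3) -> int:
    """Map a slider position (0.0 to 1.0) to a page number and snap to a
    chapter beginning if one lies within `snap_pages` of the raw position."""
    page = 1 + round(fraction * (total_pages - 1))
    nearest_start = min(chapter_starts, key=lambda start: abs(start - page))
    if abs(nearest_start - page) <= snap_pages:
        return nearest_start   # easy-to-hit anchor point
    return page

# Hypothetical chapter beginnings in a 281-page book.
starts = [1, 25, 60, 110, 170, 230]

print(slider_to_page(0.2, 281, starts))   # 60  (raw page 57 snaps to the chapter start)
print(slider_to_page(0.5, 281, starts))   # 141 (no chapter start nearby, no snapping)
print(chapter_at(141, starts))            # 3   (fourth chapter; could select the bar color)
```

The chapter index returned by `chapter_at` is what a colored or gray-shaded scrollbar would need in order to decide how to paint each segment; the snapping addresses the "landing position" corrections described above.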

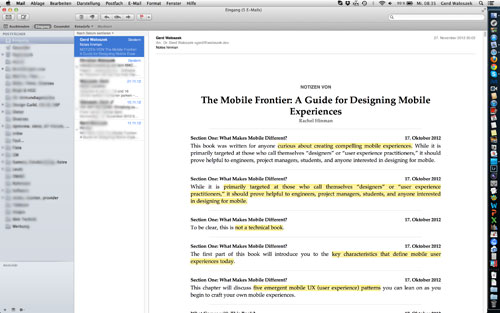

Harvesting the Notes

Having read a book, I scan it for marked text passages and comments to base my review on or potentially include in it. With "electronic marks" at my disposal, I wondered whether it would be possible to collect all the notes and also export them to another computer. This was indeed the case. In the collection, each note is surrounded by text that provides some context, with the originally highlighted text being highlighted again (see Figure 2 below). In addition, each note is assigned to a chapter or subchapter in the book. This looked very helpful to me at first sight. However, wrong assignments to chapters or subchapters caused a lot of work for me, because I wanted all the notes to be assigned to their proper locations in the book. I corrected the wrong assignments after I had exported the collection as an e-mail – the only offered option – and created an MS Word document from it on my laptop computer. Finally, I printed the notes out, because browsing through paper is still easier for me than scanning a long online document. I also opened the notes on my laptop computer (and sometimes on my iPad too) to be able to search for specific words or to copy citations correctly into the review.

Figure 2: My collection of highlighted text passages in Hinman's book

Preliminary Conclusions

Did all the effort make preparing the book review easier and faster for me? In the end, I do not think so. I felt lost in the huge number of notes that I had collected and struggled for orientation. Luckily, Hinman includes chapter summaries in her book, and my notes from the summaries (which I transferred to a separate document) allowed me to eventually gain an overview of the book's content. Based on this experience, I think that I still have a lot to learn and I certainly need more practice in order to develop a smooth workflow for my e-book reviews.

References

- Rachel Hinman (2012). The Mobile Frontier: A Guide to Creating Mobile Experiences. Rosenfeld Media • ISBN: 1-933820-55-1 (Paperback), ISBN: 1-933820-05-5 (Digital editions) • Review

- Derek Hansen, Ben Shneiderman & Marc Smith (2010). Analyzing Social Media Networks with NodeXL. Morgan Kaufmann • ISBN 13: 978-0123822291 • Review

- Nathan Shedroff (2009). Design Is the Problem. Rosenfeld Media • ISBN: 1-933820-00-4 (Paperback + PDF), ISBN: 1-933820-01-2 (2 PDF editions) • Review

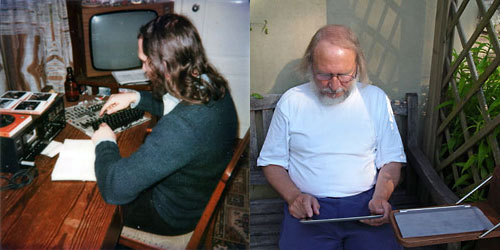

November 8, 2012: From GUIs to NUIs – The Torch Relay of "Direct" and "Intuitive" UIs

I have been working with computers for decades, having started out preparing punch tapes and cards for input and taking home heaps of computer paper in the early 1970s. As the era of micro and personal computers dawned, I bought my first computers and was happy to finally have them on my desk for my own use. These computers already featured a keyboard and a screen and allowed me to handle them interactively through a command line interface (CLI). In the mid-80s, I eventually and somewhat reluctantly acquired my first computer with a graphical user interface (GUI), a demonstration model of the Apple Macintosh 128k (don't ask me for the price...). Over the years, my relatives, friends, and colleagues – actually, the whole world – joined me and other forerunners in buying computers with GUIs. Why did we all buy computers with GUIs? We bought them because we were told that these machines were easy to understand and intuitive to handle thanks to the GUI. Interacting with them was promoted as being as "direct" as if we were handling physical objects in the real world. Ben Shneiderman once coined the term "direct manipulation" for this interaction style. For a long time, computers had been regarded as "experts only" devices. Now, the GUI's "WIMP" (windows, icons, menus, and pointer

Figure 1: My first computer, still with a CLI, and my first encounter with NUIs (right) – fingers seem to be needed for both computers...

Nearly thirty years later, Rachel Hinman indicates in her 2012 book The Mobile Frontier that all this seems to be wrong. She got her inspiration from a workshop held by Microsoft's Dennis Wixon, who co-authored the book Brave NUI World. There, Wixon presented a brief overview of the history of computing paradigms, which, omitting the tape and card (and even switch) phases, started with CLIs, moved on to GUIs, then to the upcoming UI paradigm of natural user interfaces (NUIs) (Hinman sees us on the verge of a paradigm shift), and finally to organic user interfaces (OUIs) that will take over in a not so distant future. In her book, Hinman presents a tabular sketch of the UI paradigms, and here is a commented excerpt of it:

| UI Paradigm | CLI | GUI | NUI | OUI |
| --- | --- | --- | --- | --- |
| Psychological Principle | Recall | Recognition | Intuition | Synthesis |
| Interaction | Disconnected (abstract) | Indirect | Unmediated (direct) | Extensive |
| My comment | Can be very efficient for professionals | Can be very inefficient when using the mouse only | Can lead to perplexing effects when you touch the screen inadvertently | No idea... |

For me, through Hinman's book all of a sudden GUIs had become "non-intuitive" and "indirect", whereas "direct" and "intuitive" handling is now attributed to NUIs.

I have to admit that, after my first encounters with mobile systems (in the incarnation of an Apple iPad), I am far from believing that NUIs are intuitive. Actually, I also never believed in the myth that GUIs are intuitive. It's all a matter of conventions and "cultural" traditions. It took me quite a while to become familiar with the effects of single, double, and even triple mouse clicks. And it will again take some time before I find out what all the swipes and other gestures will do for me. At the moment, I am still in a learning state for NUIs. By the way, the NUI motto is: "What you do is what you get" (WYDIWYG) – which can be quite surprising and even perplexing at times if you are a newcomer.

Whenever I see photos of children or gorillas using tablet computers, suggesting that they are so easy to use, I get annoyed. My young nieces and nephews could also use my Mac long ago for simple things even though they were not able to read. But they were never able to help me, for example, with my spreadsheet data. And I guess that the gorilla also cannot tell me how I can move my photos and data files from my iPad to my laptop computer. I had to consult the Internet on this matter – the manuals were of little help to me.

So, what's next? In about twenty to thirty years, when OUIs have caught on, people will tell us that these are the really intuitive interfaces because "input = output" and thus, handling is "direct" – no pointing with fingers to virtual objects on a flat screen. I hope that I will still be in good shape around the age of 90 to experience all this. But perhaps I should skip that stage, try to reach the age of 120, and find computers that herald the motto "What you think is what you get" (WYTIWYG). But isn't such a computer already built into my body???

References

- Rachel Hinman (2012). The Mobile Frontier: A Guide to Creating Mobile Experiences. Rosenfeld Media • ISBN: 1-933820-55-1 (Paperback), ISBN: 1-933820-05-5 (Digital editions) • Review

- Wigdor, Daniel & Wixon, Dennis (2011). Brave NUI World – Designing Natural User Interfaces for Touch and Gesture. Morgan Kaufmann • ISBN-10: 0123822319, ISBN-13: 978-0123822314

October 16, 2012: Fitting a Device to Usage Habits – A Usability Lesson

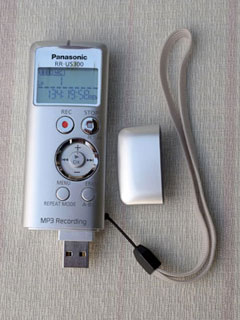

My wife usually keeps a diary when we are on vacation. On our hiking tours, she now and then takes notes in it for later use. Because this is cumbersome and holds us up, years ago we experimented with a voice recorder. But we found it too awkward to handle for regular use. This year, we decided to buy a new one with better handling that also allows us to download the sound files to a computer. Regrettably, you cannot download files to the iPad, which I wanted to take with me to store photos. So we had to live with the limitation that the audio recorder cannot hold more than 199 audio files, regardless of how much memory they use. This UI Design Blink tells the story of how we got along with the device, its options, and its limitations.

By the way, one of the first things I did when I set up the audio recorder for my wife was to mute the beep on each key press. My wife found it annoying and unnecessary.

Initially, we had no clue what the limitation to 199 audio files would mean to us, because we did not know how many notes my wife would take in the course of a day. But I suspected that we would have to delete the files after one or two days before she could record new notes. This proved correct. In anticipation, we looked for a strategy that would allow us to use the recorder without having to delete files so often. I suggested creating only one file (or only a few files) per day and pausing the recording after each note instead of stopping it. Pausing is indicated by a flashing REC symbol in the display, and the red recording LED at the top also blinks during pauses. However, you can only see the LED if you are in a dark forest, so it was mostly useless for our purposes. To pause the recording, my wife had to press the Record button again, not press Stop. To resume recording, she also had to press Record. Thus, using this strategy, she had to press Record all the time, and press Stop only at the end of the day or for longer breaks.

Figures 1-2: The audio recorder; The recorder in action

My wife gave this a try on our first walk, and it seemed to work well. Our pleasure was short-lived, however. After about an hour, my wife discovered that she had created a file more than 40 minutes in length, which reproduced our walking noises and our talks – although not very clearly because all this was recorded from within her pocket. On the other hand and to her great dismay, all the notes that she had added in the meantime were missing. What had happened? At one moment in time, my wife had gotten "out of sync" by not pressing Record hard enough. From then on, she had paused the recording whenever she thought she was taking notes and had recorded the pauses instead. After we had analyzed the issue, my wife decided to continue with that procedure and resolved to observe the display more carefully. This seemed to work, and she got along with creating only three or four files that day. So, this procedure would have allowed her to record comments about our entire vacation without deleting any audio files.
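The "out of sync" effect can be reproduced with a toy model of the recorder. The following Python sketch is a simplification based only on the behavior described above (Record starts, pauses, and resumes; Stop ends the file); the state names, the class, and the `registered` flag that stands for a press that was not hard enough are my own assumptions, not the device's actual firmware logic.

```python
from enum import Enum

class State(Enum):
    STOPPED = "stopped"
    RECORDING = "recording"
    PAUSED = "paused"

class Recorder:
    def __init__(self) -> None:
        self.state = State.STOPPED
        self.files = 0

    def press_record(self, registered: bool = True) -> None:
        if not registered:
            return  # press too weak: the device does nothing and, if muted, says nothing
        if self.state is State.STOPPED:
            self.files += 1               # a new file is started
            self.state = State.RECORDING
        elif self.state is State.RECORDING:
            self.state = State.PAUSED
        else:
            self.state = State.RECORDING  # resume from pause

    def press_stop(self) -> None:
        self.state = State.STOPPED

def simulate(registered_flags: list[bool]) -> list[tuple[bool, bool]]:
    """For each press of Record, return (user believes it records, it really records)."""
    recorder = Recorder()
    believes_recording = False
    log = []
    for registered in registered_flags:
        believes_recording = not believes_recording  # user assumes every press worked
        recorder.press_record(registered)
        log.append((believes_recording, recorder.state is State.RECORDING))
    return log

# The third press is not registered; from then on belief and reality are inverted.
for belief, reality in simulate([True, True, False, True, True]):
    print(belief, reality)
# True True
# False False
# True False
# False True
# True False
```

Because one button toggles between two states, a single unnoticed miss inverts everything that follows, and without feedback there is nothing that brings the user back in sync.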

Nevertheless, the following day we changed the procedure because my wife found it awkward and error-prone. From then on, she created a new file for each note. In the course of the day, she remarked that pressing two different buttons was easier, and she observed that she was pressing Record harder when she wanted to record something. I was relieved that, at last, everything was working smoothly. The new strategy required me to delete the audio files from time to time. Luckily, the recorder provides a command for erasing them in one step. Regrettably, this did not allow us to keep specific files where my wife had recorded, for example, dogs barking or cocks crowing.

My satisfaction lasted only two days. Then, on another walk, my wife discovered that, once more, quite a few notes were missing. This time, she was really dismayed and proclaimed that she would no longer use the audio recorder. I was sad and cross – sad because of the loss of notes, cross because we had repeatedly discussed visually checking whether the recording had started. After we had both calmed down, we came to two conclusions: (1) From time to time, the Record button did not react to my wife's press, which might have been the button's fault, not hers. (2) She rarely checked the display to see whether the recording had actually started. This procedure was too cumbersome and time-consuming for her – she just wanted to pull the device out of her pocket, press Record, and start speaking.

I considered this for a moment. Then I pressed the audio recorder's Menu button and reactivated the beep that echoes each key press. This "fix" worked more or less for the remainder of our vacation. Eventually, an initially annoying feature came to our rescue and allowed us to fit my wife's usage pattern to the device's limitations. For me, the experience was a real eye-opener and lesson in usability.

Leaving aside the issue with the number of files it can store, the question arises whether the audio recorder was perfect for its intended use. Of course, it was not. For example, if different keys produced different sounds, the resulting – up-down or down-up – "melody" would tell my wife immediately whether she had hit the correct button or was out of sync. The recorder's simple universal beep is definitely archaic and leaves room for usability improvements. A future firmware update could make different sounds easily available, but I guess this will never happen.

Afterword

After I had finished this article, it came to my mind that different sounds for the keys would have allowed my wife to use the first strategy successfully. She told me afterwards that she had already had the same idea. In the third millennium, assigning spoken commands like "Record", "Pause", and "Stop" to keys to replace the ambiguous beeps should not really be a problem. I would be content with English commands. And in the fourth millennium, or perhaps a little bit earlier, it might even be possible to assign your own spoken commands to the keys – but these are just dreams...

October 12, 2012: Designing for Beginners and Experts – Reviving an Old Design Proposal

In a previous UI Design Blink, I pointed to the design practice of arranging figures and explanatory text closely together, and justified it with the contiguity effect described by the cognitive load theory. However, the same theory's redundancy effect implies that this practice can be detrimental for users for whom a figure is self-explanatory (I will call them "expert users" in the following). Therefore, I asked the obvious questions: "How can designers know in advance if their users are experts, casual users, or mere beginners? And if designers do not know their audience, how can they address this dilemma?" In this UI Design Blink, I primarily focus on the second question and revive an – admittedly, old – design proposal. Please note that the topic of designing for different user groups in general is a huge field in itself, which would definitely exceed the scope of this article.

When designers know their audience, they can act appropriately. For example, they can place descriptive text close to figures* for beginners and casual users or show figures alone for expert users, as I have described in the above-mentioned article. They can also display different amounts of text explanations on the screen – we experimented with this approach at SAP. But if designers do not know their audience, they either need to design differently for each prospective user group, for example, by applying the mentioned design principles in different versions of their application, or they face the challenge of finding a solution that more or less fits all. The latter is the preferred approach as it promises to cause less effort for the developers. In the following, I will turn to such "universal" solutions, but note that they still require some additional effort. The question is, however, on whose side the effort will be.

One possible line of attack originates from a fundamental difference between printed matter and – my focus here – software. The first is static, whereas screens realized in software can also be dynamic and thus give designers more freedom than static media. Tooltips are a well-known example of a "dynamic" strategy for supporting beginners and casual users: When users move the mouse over an object on the screen, a tooltip appears showing an explanatory text. Tooltips seem to be the perfect solution to the beginners-experts issue, because beginners can point the mouse to those objects on the screen that need explanation, while experts can simply ignore tooltips. I personally do not like tooltips, though, because pointing requires physical effort, and if you need explanations for more than one object on the screen, pointing becomes a sequential process that takes time. (There are more disadvantages to presenting explanations only sequentially, but I will skip them here.)

To alleviate the mentioned issues, one might display all explanations at once. Moreover, similar to printed material, a parallel presentation would allow users to easily compare related objects. This approach usually requires putting the application in a "help" state in which users cannot continue to work (the user needs to issue a menu command or press a dedicated key combination to put the application in this state). I encountered a few applications that had adopted this approach many years ago. Obviously, this approach did not prevail because it was too cumbersome for users, and it was not applicable to Web pages and applications.

Nonetheless, I would like to revive this approach and pull an old design proposal of mine out of the drawer. It was too difficult to realize technically when I came up with it in 1998. I suggested adding an "explanation overlay" to critical screens, which can be turned on and off using a dedicated key (such as the Windows key; either used as a toggle key or as a switch – photographers will know this as "T" and "B"), and which does not prevent users from working with an application. Today, transparency has become commonplace and therefore, implementing an overlay screen should no longer face any technical obstacles. Overlays could either be delivered by the software manufacturer (which makes little sense in the case of ERP screens, which are typically customized at customers' sites), designed by consultants or system administrators, or even be created and modified by the users themselves. In a simple version, overlays could be designed with a text tool for writing and placing annotations. A more advanced version would also offer simple drawing tools like lines and arrows, brush and pen of different widths, and colors for all drawing and text elements – just like in a drawing or presentation program. With the advanced version, we would probably leave the realm of cognitive load theory and its statements on text explanations, because it also allows you to indicate dynamic aspects such as screen flow, dependencies between fields, or proposals for input values. See Figures 1 and 2 for an example of the original proposal:

Figure 1-2: Original R/3 screen (left) and with overlay screen showing screen flow and suggestions for input values (right) (both screens from 1998 and in German)

All in all, overlay screens would support beginners and casual users, while experts could easily ignore or adapt them to their own purposes.
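The two ways of switching the overlay mentioned above (toggle key versus hold switch, the "T" and "B" of photographers) can be illustrated with a minimal sketch. It only models the visibility state; the class name `OverlayController`, the mode names, and the `key_down`/`key_up` methods are hypothetical and not part of the original 1998 proposal or of any real toolkit.

```python
from dataclasses import dataclass


@dataclass
class OverlayController:
    """Tracks whether the explanation overlay is visible (hypothetical sketch).

    mode "toggle": each key press flips visibility (like "T" on a camera).
    mode "hold":   the overlay is visible only while the key is held (like "B").
    """

    mode: str = "toggle"
    visible: bool = False

    def key_down(self) -> None:
        # Called when the dedicated overlay key is pressed.
        if self.mode == "toggle":
            self.visible = not self.visible
        else:
            self.visible = True

    def key_up(self) -> None:
        # Called when the dedicated overlay key is released.
        if self.mode == "hold":
            self.visible = False


# Usage: in toggle mode, the overlay stays on after the key is released.
controller = OverlayController(mode="toggle")
controller.key_down()
controller.key_up()
print(controller.visible)
```

In both modes the application underneath keeps receiving all other input, which is the point of the proposal: the overlay only changes what is displayed and does not put the application into a separate "help" state.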

Of course, this proposal represents just one of many possible approaches to the beginners-experts issue, or the design for different user groups. It is a dynamic approach in that users need to activate the overlay (itself static), and it shifts the effort in the direction of the administrators and users once the technology has been provided by the software manufacturer. I did not apply for a patent in 1998, nor will I now, but I guess I am not the only one who came up with this idea.

Technical Note: My original proposal for an overlay screen was based on bitmap graphics. I created the prototypical screens in Adobe Photoshop using layers, drawing tools like brushes, and a small graphic tablet. A current version would instead use vector graphics, layered objects that can be freely arranged, and a number of useful drawing and text tools.

*) Note: For this article, I would like to broaden the term "figure" to "anything object-like on a screen that needs explanation." I assume that the implications of the cognitive load theory still hold, provided that the texts are simple descriptions and not, for example, content summaries.

References

- Wikipedia: Cognitive Load Theory

- Online Learning Lab (OLL), University of South Alabama: Cognitive Load Theory

October 10, 2012: Sushi Rolls and the Effects of Contiguity and Redundancy

In this UI Design Blink, I present a design principle that is based on a psychological effect – I will disclose its name below – and typically adhered to by designers. Sometimes it is violated, though, because of, for example, what I once called "careless design." At the end of this blink, I will show that there are even cases where designers should violate it because of another psychological effect.

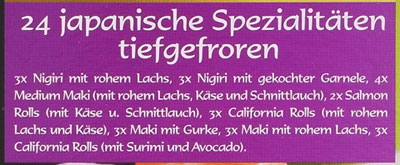

I would like to introduce the design principle by recounting a recent incident at my home. My wife and I were longing to eat sushi rolls for supper. Luckily, our desire could be satisfied because our refrigerator still contained a package of frozen sushi rolls, which we took out to thaw (note, however, that you need to do this in time...). I grabbed the empty package and looked at it to see what it had contained. The front boasted a large photo showing all 24 sushi rolls nicely set out on a plate. There was also a description of how many pieces of each roll type the package contained (see Figures 1 and 2).

Figure 1: The complete package with a photo of the sushi rolls on the front and a separate description of how many sushi rolls of which type are in the package

Figure 2: The description of the package content enlarged (in German)

However, not being an expert in sushi rolls, I was unable to assign the names of the sushi rolls to the pictures (additional information like the quantities of the different roll types did not really help me because several types were provided in the same quantities). I told my wife about my failed attempt at identifying the rolls but she didn't believe me and just laughed at me. However, after she had tried to do this herself she had to admit that she couldn't work it out either.

What went wrong here – at least for people who are not experts in sushi rolls? The package designers did not observe a basic principle: They did not arrange the descriptions close to or on the pictures. Alternatively, they could have placed the names around the picture and used lines or arrows to connect them to the samples in the photo. Either way I would have known immediately how each sushi roll type looks. Just to illustrate this, here is a quick-and-dirty example of how this might look:

Figure 3: The package with added names to identify typical sushi rolls

Although violated in this example from daily life, the principle of placing visual information and its description close together is usually common design practice, and I asked myself, "Which theory supports this practice and how does it justify it?" I faintly remembered that I had stored this principle in the innards of my brain as the "locality principle," but felt unsure about its background. However, when I searched the Web for this term, I mostly found references from computer science that have a completely different meaning. Then I remembered that a colleague of mine had recently held a presentation about cognitive load theory for our team. This theory is primarily applied to the design of instructional materials to make learning easier. My colleague had encountered this theory when she did research on online learning materials at university. She pointed out that it is also applicable to user interface design and, as my example above demonstrates, to any kind of information design.

I searched the Web for references to the cognitive load theory, and learned that it basically "starts from the idea that our working memory is limited with respect to the amount of information it can hold, and the number of operations it can perform on that information" (from OLL, University of South Alabama). There, I also found a number of design implications of the theory. One of them is the contiguity effect*, which means that "people learn better when you place print words near corresponding graphics." Wow, I had finally found a name and a justification for the design principle that I, up to now, had understood only intuitively: The design principle of putting graphics and text close together is based on the contiguity effect postulated by the cognitive load theory.

However, as always, things are not as simple as they originally seemed to be. The cognitive load theory has another implication, called the redundancy effect*. It states that "simultaneous presentations of similar (redundant) content must be avoided. Avoid words as narrations and identical text with graphics" (from the same source). This reads like a direct contradiction to the contiguity effect, but, as I also found out, is valid only for self-explanatory figures. Thus, with respect to the optimal placement of figures and associated text, designers are in a dilemma:

- If a figure is self-explanatory (here people are in an "expert" state), research data indicates that processing the additional text unnecessarily increases working memory load.

- If the text is essential to intelligibility (here people are in a "learner" state), placing it on the figure rather than separately will reduce the cognitive load associated with searching for relations between the text and the figure

(both from OLL, adapted).

In the sushi rolls example above, my wife and I were definitely in the "learner" state, and we would have appreciated descriptions close to the pictures. As an example of the "expert" state, it is detrimental to our understanding if a presenter reads the text that is on his or her slides because the audience tends to compare spoken and written text and is thus distracted from understanding the content (one might say that the comparison activity reduces working memory capacity). Recently, I experienced such a case during a presentation myself and found the redundancy indeed distracting.

But how can designers know in advance in which state their target audience will be? Sometimes they are lucky enough to know this, but in general they don't. It looks as if there were no short answer to this question. Therefore, in a forthcoming UI Design Blink, I will take a closer look at how designers might deal with this dilemma.

*) Note: These terms were introduced by different researchers under different names using different, but related theories; often, however, both theories are mixed together in the literature. Here is an overview of the related theories and the names of the effects or principles in each theory:

| Researcher | Theory | Effect 1 | Effect 2 |
| --- | --- | --- | --- |
| John Sweller | Cognitive load theory | Split-attention effect / principle | Redundancy effect / principle |
| Richard E. Mayer | Cognitive theory of multimedia learning | Spatial/temporal contiguity effect / principle | Redundancy effect / principle |
| Remarks | Both theories are applied to multimedia learning | Mayer lists two different kinds of the contiguity effect | Note that for simple materials the redundancy effect is less prominent for experts |

Alternative:

| | John Sweller | Richard E. Mayer | Remarks |
| --- | --- | --- | --- |
| Theory | Cognitive load theory (CLT) | Cognitive theory of multimedia learning | Both theories are applied to multimedia learning |
| Effect 1 | Split-attention effect / principle | Spatial/temporal contiguity effect / principle | Mayer lists two different kinds of the contiguity effect |
| Effect 2 | Redundancy effect / principle | Redundancy effect / principle | Note that for simple materials the redundancy effect is negligible for experts |

References

- Wikipedia: Cognitive Load Theory

- Online Learning Lab (OLL), University of South Alabama: Cognitive Load Theory

September 14, 2012: Let the Graph Tell Us the Answer

This morning, a colleague of mine made me aware of Jeff Sauro's blog and provided me with a link to it. I soon learned that some other colleagues from our user research team also know Sauro and his blog articles on methodological topics. Therefore, I decided to add him to the SAP Design Guild people list and his blog to the list of design columns.

Then, I took the time for a quick look at Sauro's blog and scanned the titles of his articles. And indeed they deal with usability testing, heuristic evaluation, personas, and other method-related topics. Sauro's latest article, entitled Applying the Pareto Principle to the User Experience, attracted my attention in particular, because I felt that I had heard the name "Pareto" before, but I was not sure what it was about. An image showing "80/20" suggested that it is related to the 80/20 rule, called the "Pareto principle" by Joseph Juran, and this proved to be the case. However, for details on the Pareto distribution and principle, I would like to refer you to Sauro's article. In short, the generalized Pareto principle is often formulated as follows: Roughly 20% of the effort will generate 80% of the results.

The Pareto principle also applies to software. For example, when users are asked in usability tests what one thing they would improve about a Website or software, and these open-ended comments are converted into categories, the resulting frequency graph shows a Pareto distribution (see Figure 1 for an example). The same applies to formative usability testing where researchers are looking to find and fix usability problems. When researchers log which user encounters which problem in a user-by-problem matrix, this also results in a Pareto distribution. The graph in Figure 1 below, based on one in Sauro's article, shows the problems users encountered while trying to rent a car from an online service. The researchers observed 33 unique issues that were encountered a total of 181 times.

Sauro states that "nine problems (27%) account for 72% of all poor interactions", but I asked myself, "How can I see that in the graph?" I decided to play around with the data and to look for a graph that can answer this question easily. First I copied Sauro's graph into Photoshop, measured the pixel height of the columns, and put the data into an Excel spreadsheet. This allowed me to show you the Pareto distribution without the need to use Sauro's original graph (Figure 1):

Figure 1: User-by-problem matrix showing a Pareto distribution (vertical axis: percentage of users, horizontal axis: usability problems by ID)

The first idea for an alternative visualization that came to my mind was to use a pie chart instead of a multiple bar chart (Figure 2):

Figure 2: Pie chart illustrating how many problem encounters account for which fraction of the total number of encounters

The pie chart in Figure 2 illustrates roughly how many problem encounters account for which percentage of the total number of encounters – the whole pie represents 100% (or 181 encounters). I might have added a scale at the circumference to make the chart easier to read. I did not add any labels to the chart because I just wanted to illustrate the principle. At least, we can fairly easily recognize that four problems account for nearly 50% of all poor interactions.

However, the pie chart did not satisfy me fully, so I looked for a graph that is even better suited to answering my question – and similar ones. Finally, I ended up with a stacked bar chart that like the pie chart adds up to 100%. After some fiddling around with the Excel bar chart (I had to switch row/column), I arrived at this result (Figure 3):

Figure 3: Stacked bar chart illustrating how many problem encounters account for which percentage of the total number of encounters

Of course, a simple line would serve the same purpose as the stacked bar chart, but the bar looks nicer in my opinion. Moreover, the stacked bar chart makes it easy to answer arbitrary questions like, "How many problems account for X% of the poor interactions?" Now it is easy to verify Sauro's statement that nine problems account for 72% of all poor interactions.
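The question that the stacked bar chart answers visually can also be computed directly from the encounter counts. The following is a minimal sketch, not the spreadsheet I actually used: the function names are my own, and the counts in `example_counts` are hypothetical illustration values, not Sauro's data.

```python
from itertools import accumulate


def cumulative_shares(counts):
    """Cumulative percentage of all encounters, with problems sorted by frequency (descending)."""
    ordered = sorted(counts, reverse=True)
    total = sum(ordered)
    return [100 * running / total for running in accumulate(ordered)]


def problems_needed(counts, target_percent):
    """Smallest number of the most frequent problems that cover at least target_percent of encounters."""
    for n, share in enumerate(cumulative_shares(counts), start=1):
        if share >= target_percent:
            return n
    return len(counts)


# Hypothetical encounter counts per usability problem (NOT the data from Sauro's article).
example_counts = [20, 15, 10, 5, 4, 3, 2, 1]

# How many problems account for at least half of all encounters?
print(problems_needed(example_counts, 50))
```

Each value returned by `cumulative_shares` corresponds to the upper edge of one segment in the stacked bar, so reading "X%" off the bar and calling `problems_needed` with the same X should give the same answer.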

References

- Jeff Sauro (September 12, 2012): Applying the Pareto Principle to the User Experience

- Jeff Sauro's blog

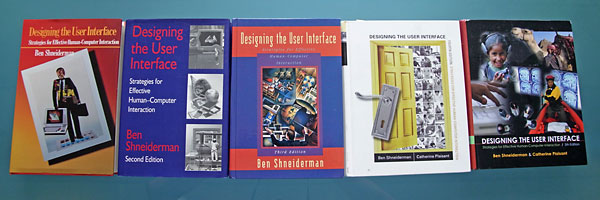

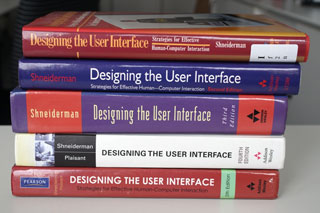

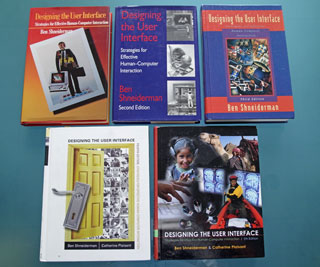

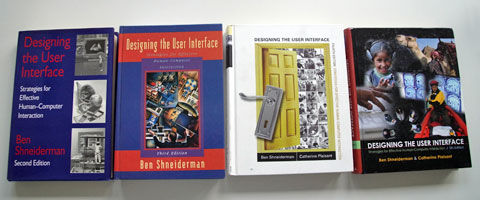

September 12, 2012: Completing My Designing the User Interface Collection – at Least for the Time Being

Yesterday, the postman finally delivered the first edition of Ben Shneiderman's classic textbook Designing the User Interface. This completed my Designing the User Interface collection – at least for the time being. I had ordered a used copy of the first edition from a book store, hoping that it would be the correct version. It did indeed turn out to be the first edition, but true collectors will probably be quick to point out that it is not the "real" first edition, because it is a reprint with corrections from November 1986 (copyrighted for 1987). Personally, however, I do not mind because I was interested in the content, not in having a collector's item. And the 1986 corrections are welcome. My collection now looks like this:

It was not easy to find the original publication date of the first edition. I searched Worldcat – Formats and Editions of Designing the User Interface and found several references to 1987 publications and one to a version from 1986; however, the respective link also leads to a 1987 version. Anyway, I thought that it would be a nice exercise to relate the publication dates of the editions (which span more than 20 years) to events in my own computer and usability history:

- First edition (1986/7): Moving from the command-language- and character-based TRS-80 computer (finally, a Model 4p) to a used original Macintosh 128k and thus, to the world of graphical user interfaces. From then on, I never programmed in assembler again.

- Second edition (1992/3): Moving from the university to SAP (1993). In 1992, I attended a usability workshop held by the German computer science society (GI) and I met some future SAP usability colleagues. In 1993, I started working in SAP's usability group and edited the R/3 Styleguide (see the guidelines archive on the SAP Design Guild).

- Third edition (1997/1998): "Enjoy" initiative at SAP (1998). It introduced the frog GUI and a new application design to R/3; SAP also cooperated with external consultants such as Karen Holtzblatt and Alan Cooper (see the Philosophy edition on the SAP Design Guild for details).

- Fourth edition (2004/2005): From User Productivity to SAP User Experience. Dan Rosenberg took on the leadership of the newly established SAP User Experience team in 2005 (until April 2012).

- Fifth edition (2009/2010): Tenth anniversary of the SAP Design Guild (2010)

Here are two more views of my Designing the User Interface collection:

As already mentioned in Rounding Off My Designing the User Interface Collection four weeks ago, this addition to my collection will probably not be the last. I am convinced that Shneiderman and his co-authors are already busy with a sixth edition. Considering the intervals between the previous publications, which range from five to seven years, we should expect the sixth edition in 2014 or 2015. But only the authors know whether my prediction is correct...

P. S.: Ben Shneiderman suggested that I also look for an even older book of his, Software Psychology: Human Factors in Computer and Information Systems, from 1980 (at that time, I may have owned my very first microcomputer, an OHIO Superboard). However, it looks as if acquiring a copy of that book will be a challenge of some magnitude.

References

- Ben Shneiderman, Catherine Plaisant, Maxine Cohen & Steven Jacobs (2009). Designing the User Interface: Strategies for Effective Human-Computer Interaction (5th Edition). Pearson Addison-Wesley. ISBN-10: 0321537351, ISBN-13: 978-0321537355 • Review

- Ben Shneiderman & Catherine Plaisant (2004). Designing the User Interface (4th Edition). Pearson Addison-Wesley. ISBN: 0321200586 (Hardcover) • Review

- Worldcat – Formats and Editions of Designing the User Interface

September 1, 2012: Design Thinking, Interaction Design, and UI Design

When writing an SAP Design Guild article about Design Thinking, I hit on the question of how Design Thinking differs from other design disciplines, such as interaction design and my own domain, UI design (which regrettably and confusingly comes in many guises and has many names). Initially, I had planned to publish my thoughts and observations spurred by the question at the end of my article. But this would have made an already long article even longer. Not only for this reason but also to keep the more personal style of discussion, it is probably more appropriate to offer my thoughts and observations in the form of a UI Design Blink. I will start it with a prelude contrasting two somewhat oversimplified "archetypes" of interaction design and UI design.

Prelude: UI Design Versus Interaction Design

In several articles for the SAP Design Guild (see, for example, here), I have discussed the relationship between designing user interfaces for software* (UID, UxD) and the more general interaction design (IxD), which is more oriented toward designing physical artifacts (although software typically drives the artifacts). I have observed that many interaction designers still adhere to the model of the "genius designer" or "artist designer" and do not follow, or even reject, a user-centered design approach. A "prototypical" interaction designer might teach and work at an art school or university and, together with students, build exploratory artifacts that are meant to provoke or enlighten users and to stimulate critical thought.

Designers of user interfaces for software (UI designers, for short), on the other hand, have a strongly user-centered point of view. They feel and act as user advocates, and often follow a research-oriented approach in their work. User and task constraints are not the only constraints on their designs. On the one hand, international norms and UI guidelines, which are different for each platform and application, and at each company, limit their "creativity" severely. On the other hand, their designs are also confined to the options that the underlying technical platform makes available. All this makes me feel that working as a UI designer lies somewhere in the middle of a continuum between art (or design in the sense mentioned above) and science, specifically in the realms of craft or engineering, and that it leads to a mindset that definitely differs from that of a "real" designer.

*) Please note that some of the people who I would call UI designers call themselves interaction designers. In the end, everything is some sort of interaction design: In the case of the UI designers that I speak of, it is human-computer interaction (or computer-human interaction), while in the case of the "general" interaction designers it can be any kind of interaction between humans and designed artifacts.

Design Thinking Versus Other Design Approaches

Design Thinking brings the "designerly ways of working" from the world of design into the business world, and, as a general problem-solving approach, even to "any of life's situations." However, it differs from other design approaches by being user-centered and empirically-oriented: Design Thinkers observe users and their physical environments, confront them with prototypes, and feed the outcomes of their experiences back into the design. "Genius designers" would never do this; instead, they would confront or even provoke people directly with their designs. Some designers "throw" their designs at people and observe how they engage with the designs – they get empirical only "after the fact". Thus, Design Thinking seems to build a bridge between more "designerly ways of working" and more "user-oriented ways of working". Now, a natural question would be, "But what the heck is the difference between Design Thinking and UI design (or its siblings User Experience (UX) and User-Centered Design (UCD)) – aren't both user-centered?"

Design Thinking Versus UX/UCD

Many people do equate Design Thinking with User Experience or User-Centered Design. This is, in my view, an oversimplification. In some ways, Design Thinking is much broader in scope than UI design, which in the end is a highly specialized design discipline, yet in others it is much narrower in scope. By "broader in scope" I mean that Design Thinking is a general problem-solving methodology, which is particularly suited to generating a large number of new ideas. It can rightfully be regarded as a creativity method and as an approach to spurring innovation. Being a general problem-solving methodology, Design Thinking can not only be applied to the design domain itself, but also to any problem, particularly if it is ill-defined.

By "narrower in scope" I mean that Design Thinking, being primarily a creativity method, cannot cover the wealth of methods and tools that UX/UCD has to offer in the course of the software development process. UX/UCD is based on the research discipline Human-Computer Interaction (HCI), which looks back on more than 25 years of developing empirical, user-oriented methods. The methods used in UX/UCD form a mix of more scientifically-oriented tools and "best practices" that practitioners have developed in the field. Among these are tools that allow you to measure the ease of use and other characteristics of applications quantitatively and qualitatively. KPI studies, for example, provide reliable and reproducible numerical results that can be generalized over a wide range of software applications and also allow you to track improvements over time. Design Thinking methods, on the other hand, focus on spurring creativity and supporting experimentation, and not so much on providing "hard" results. But because Design Thinking is user-oriented, there is a natural overlap with UX/UCD methods, particularly early on in the design process when the problem is defined and later when ideas, that is, potential solutions, are tested by users (see also my SAP Design Guild article on Design Thinking for a side-by-side list of methods).

This simple difference gives you some idea of the broader scope of UX/UCD: Several years of university study are required if you want to take up a UI-design-related profession, while Design Thinkers are trained in courses ranging from one day to one semester. One might say that the first is a profession, while the latter is a "mindset" – an important one, I would like to add.

Finally, while there is some overlap in UCD and Design Thinking methods, UX people approach the same problem very differently from designers: UCD people appear to be more serious, are method-oriented, and analytical, and are often perceived as the "design police" who spot design and guideline errors, while Design Thinking people are more playful and experimental, and highlight the "creative" aspects when looking for ideas – and in the end solutions.

Outlook

In the recent past, some designers have bemoaned the limitations of traditional HCI methods. The Internet and mobile devices have created new usage contexts, where "users" no longer seem to have goals or perform tasks (Janet H. Murray therefore speaks of "interactors"). Designers have to "go out into the wilds" to understand these contexts, but the methods currently available no longer fit. A more playful, creativity- and artifact-oriented approach like Design Thinking may come to the rescue and offer methods that are more appropriate in such "wild" contexts.

References

- DIS 2010 – A UI Design Practitioner's Report

- August 15, 2012: Users Don't Have Goals and Don't Do Tasks – Do They?

- Janet H. Murray (2012). Inventing the Medium – Principles of Interaction Design as a Cultural Practice. The MIT Press • ISBN-10: 0-262-01614-1, ISBN-13: 978-0-262-01614-8 • Review

August 16, 2012: Rounding Off My Designing the User Interface Collection

In my review of the fifth edition of Ben Shneiderman's classic textbook, Designing the User Interface, I wrote:

Three editions of the book are lying in front of me as I write this review (I hope to acquire the first and second editions one day). They demonstrate that this book is not only a standard in itself but also an interesting resource for investigations into the history and changing orientation of the user interface design field and community. However, such an investigation would require access to all five editions, because the changes between editions are subtle and each edition has been updated to accommodate the current UI design topics and trends.

Yesterday, I came one step closer to fulfilling my hope of acquiring all five editions of the textbook: The postman delivered the second edition. Here is the nice packaging:

And here is the story behind it: At the beginning of April 2012, an SAP Design Guild reader from Toronto, Canada, sent me an e-mail telling me that she had read the review and my remark in it. She wanted to give (or throw) away her copy of the second edition of Designing the User Interface and asked me whether I wanted it and would be able to figure out the easiest way she could get the book to me. I wanted it, indeed, but figuring out how to send it and pay for the postage took quite a while and produced a number of e-mails in both directions, interrupted by external events such as the UEFA EURO 2012 championship, which, of course, had absolute priority. But at the beginning of July, we had finally figured everything out, and the book could go on its long journey from Canada to Germany, which took nearly six weeks.

Thus, thanks to our Canadian reader, my current collection of "Shneidermans" looks like this:

Now, I hope that, one day, someone will offer me the first edition to complete my collection. But I am probably right in assuming that Shneiderman and his co-authors are already eagerly working on a sixth edition...

P. S.: In the meantime, I also got a response from Ben Shneiderman. He pointed out that the first edition has become "something of a collectors item and historic document", and he expressed his hope that I can get one. He also offered to hunt around himself to see if he has an extra copy. However, I have decided to order a used copy of the first edition from a book store – hopefully it will indeed be the correct version.

References

- Ben Shneiderman, Catherine Plaisant, Maxine Cohen & Steven Jacobs (2009). Designing the User Interface: Strategies for Effective Human-Computer Interaction (5th Edition). Pearson Addison-Wesley. ISBN-10: 0321537351, ISBN-13: 978-0321537355 • Review

- Ben Shneiderman & Catherine Plaisant (2004). Designing the User Interface (4th Edition). Pearson Addison-Wesley. ISBN: 0321200586 (Hardcover) • Review

August 15, 2012: Users Don't Have Goals and Don't Do Tasks – Do They?

At the Interaction 2012 conference in Dublin, Ireland, I attended two presentations that both questioned the traditional HCI notion that users – of software and devices – have goals and do tasks. I assume that both authors positioned their presentations as "mild provocations" toward the design audience. Actually, I did not feel provoked by the presentations, but somewhat confused. In this UI Design Blink, I will explain why and also propose a "way out" of the confusion.

Users Don't Have Goals

Andrew Hinton started his presentation, Users Don't Have Goals, with a photo of his well-filled refrigerator (see the photo on the right for a facsimile). Imagine that the "user" is standing in front of the fridge and pondering what to eat. Hinton pointed out that, in this context, he was not able to recognize any user goals. He contrasted this "goal-free" situation with the HCI concepts and methods that have been developed and employed for more than 25 years and are presented, for example, in famous books such as The Psychology of Human-Computer Interaction, The Design of Everyday Things, and About Face (see references below) – goals and tasks are at the core of these publications.

I will skip Hinton's rant about traditional HCI and goals (see his public slides for details) and turn to his conclusions. There, Hinton admitted that sometimes users do have fully articulated goals. But he argued that designers should not start designing with that assumption. If, instead, they were to start by saying, "These users don't have goals... so how do I design for everything else?", he is convinced that "they would end up discovering contextual facets they would otherwise have missed, and that they would be satisfying more users than they would otherwise." Hinton also made an appeal for "designing for the fuzzy, desire-driven, pre-conscious, situationally complex area of people's lives, where our increasingly pervasive, ubiquitous, embedded products are available to people. ... It's where they are most relevant ... where desires and behaviors truly begin." I agree that this is an exciting and challenging new action field for designers, but I am not yet convinced that this has already become the core domain of my profession: the design of business software. Somewhat confused, I asked myself, "Should all designers now design 'goal-free'?" (Quotes taken from conference notes)

Real Users Don't Do Tasks

In her presentation, Real Users Don't Do Tasks: Rethinking User Research for the Social Web, Dana Chisnell took the same line when she questioned the usefulness of traditional HCI methods. However, she included an important restriction in the title: She referred to the social Web, not to typical desktop or even business applications. I wondered what she meant by "real users". I assume that she was using this term to refer to the users that Hinton had in mind and who, indeed, far outnumber the users of traditional software and of business software in particular.

Figure: The "holy" books of HCI that Hinton mentioned; Dana Chisnell's book is pictured on the right (in preparation)

Chisnell started her presentation by telling the audience that "the state of the art is a generation old" and that it is "time for a new generation of user research methods". She definitely knew what she was talking about, because she co-authored the second edition of the well-known Handbook of Usability Testing with Jeff Rubin (who wrote the first edition on his own). She added that "usability testing isn't telling us what we need to know for designing for social. ... People don't live in the world doing one task with one device out of context." I admit that in the business domain, we are lucky because usually the context is clearly defined, that is, the user's role, goals, and tasks as well as the business goal.

By the way, I heard similar arguments from Kia Höök in one of the keynotes at the INTERACT 2009 conference in Uppsala, Sweden. Höök talked about "going to the wild" and about pervasive games, a domain that is definitely very different from performing business tasks like processing a purchase order or even booking a flight on the Web (actually, pervasive games might even include such elements). Clearly, novel approaches and methods are needed to cope with open-ended domains, and, as the presenters pointed out, we are still at the beginning of developing the necessary methodology.

A Man of Straw?

These presentations were meant to create awareness but, in my opinion, they also built up a "man of straw". The "anti-goals-and-tasks" proponents made such universal claims that I felt confused and even irritated. Actually, I cannot discover any "real" contradictions. Yes, there are – in addition to the old scenarios – new, exciting, and challenging opportunities for designers. I agree with the message that when designers seize these opportunities and "go to the wild" or to "the complex area of people's lives" (or whatever label you wish to put on it), traditional HCI methods are no longer sufficient and novel approaches are needed. But this should not come as a surprise to us. On the other hand, I work for a company that develops business software, not pervasive games or social media. For these applications, starting from user goals and tasks still seems an appropriate and useful approach. Therefore, I do not agree that all of us should now dance around a new "golden calf". Nevertheless, it is a good idea to make designers aware of the limitations of the "goals and tasks" approach, because "times they are a-changin'" in the business world, too: business software is leaving the desktop and also "going to the wild" – which should be reason enough for UX people to check their methods inventory and prepare for the future.

A Way Out

Recently, I came across Janet H. Murray's textbook, Inventing the Medium, in which she criticizes the term "user" as being too limited for people who interact with digital media (which include software applications and mobile devices). She still refers to "users" when she talks about interactions that are based on the tool or machine model, as she calls it, because there the term "user" is established and useful. For all other contexts, she proposes the term "interactor" as "someone who is not so much using a device as acting within a system. Interactors focus their attention on a computer-controlled artifact, act upon it, and look for and interpret the responsive actions of the machine." She continues: "A user may be seeking to complete an immediate task; an interactor is engaged in a prolonged give and take with the machine which may be useful, exploratory, enlightening, emotionally moving, entertaining, personal, or impersonal. Interactors are also engaged with one another through the mediation of the machine, and with the larger social and cultural systems of which the automated task may only be one part."

When Murray states that "by designing for interactors rather than users we remind ourselves of the larger context of design beyond mere usefulness", she is not too far from Hinton's appeal for "designing for the fuzzy, ... , situationally complex area of people's lives."

While the term "interactor" may sound somewhat abstract and artificial, it might help avoid the confusion that the presentations described above – which are only examples – caused for me. The presentation titles would have immediately lost their provocative appeal if they had been phrased as Interactors Don't Have Goals and Interactors Don't Do Tasks, because they would have been more or less self-evident. All in all, I have come to the – hopefully universally accepted – conclusion that users have goals and do tasks, while interactors usually neither have goals nor do tasks. Designers should be aware of which group they are designing for – users or interactors.

Conclusion

In my opinion, the problem with statements like "users don't do tasks/don't have goals" lies in their absoluteness – probably as a reaction to the long-prevailing focus on goals and tasks. Users who have goals and do tasks may already have been outnumbered by the "interactors" of digital media, as Murray calls them, but they are still an important clientele – or fraction of "interactors" – whose needs UI designers have to address. And last but not least, designers of business software should also be prepared for new types of users – or interactors? – and scenarios in the future.

References

- Stuart Card, Thomas Moran, & Allen Newell (1983/86). The Psychology of Human-Computer Interaction. CRC • ISBN: 0898598591 (Paperback)

- Donald A. Norman (2002). The Design of Everyday Things. Basic Books • ISBN: 0465067107 (Paperback) • Review

- Alan Cooper, Robert M. Reimann, & Dave Cronin (2007). About Face 3.0: The Essentials of Interaction Design. John Wiley & Sons • ISBN: 0470084111 (Paperback) • Short presentation

- Andrew Hinton: Users Don't Have Goals (public slides)

- Dana Chisnell & Jeffrey Rubin (2008). Handbook of Usability Testing: How to Plan, Design, and Conduct Effective Tests (2nd ed.). Wiley • ISBN-10: 0470185481, ISBN-13: 978-0470185483 • Review

- Interaction 2012 – Again, a UI Design Practitioner's Report

- INTERACT 2009 – Research & Practice?

August 8, 2012: Now I Know What "Cloud" Means

This blink continues my reports on my experiences in the new mobile world. These experiences have also introduced me to a "world" that I have avoided up to now: the cloud and its specific incarnation, Apple's iCloud.

I got 5 GB of iCloud space for free for my new iPad. It allows me to synchronize my contact data, calendar, photos, documents, and more between my iPad and my other computers (actually, between any Apple computers). I decided to give the iCloud a try for my documents and contact data. For the latter, I use the Address Book application (called "Contacts" as of Mountain Lion). And indeed, thanks to the iCloud, my contact data from my laptop appeared on my iPad within seconds. I was amazed!

A few weeks later, I bought a new laptop – I had planned this acquisition for quite a while – and once again, my contact data appeared there in the blink of an eye (I chose to migrate my data manually because automatic migration via wireless connection would have taken between 120 and 150 hours). Again, I was impressed with how smoothly iCloud operated. The cloud approach began to look very attractive to me.

Now comes part two of my story. I have to admit that this part is not a success story – it is more of a "lessons learned" story. I wanted to sell my old laptop computer to one of my nieces and therefore deleted all of my programs and data, which took quite a while. Finally – it was already late at night – I deleted my contact data from the Address Book application, deleted my user account, shut down my old laptop, and waved good-bye to my trusty companion. (In retrospect, I do not know why I did it this way, because deleting my user account from the laptop might have automatically deleted all the contact data as well. It was probably because I am used to doing things in "orderly" fashion.)

I decided to relax a little before going to bed: I turned on my new laptop and began to surf the Internet, when suddenly I got the urge to open the Address Book application. To my great dismay, all my contact data had vanished – thanks to the cloud, as I immediately realized! I admit that this shook me up. Not only was my contact data lost; much worse, all of my personal access data for various Websites and the serial numbers of my software were lost as well. Why? Because I had "misused" the Address Book application for storing this data, too. As a UI person, I know that some users "misuse" applications for purposes that designers do not think of – and now I was a "culprit" myself.

My last hope was that my iPad still held the data. I had turned it off in the evening and therefore hoped that the data had not yet been synchronized. But alas, when I finally managed to open the Contacts application, I once again stared at an empty address book – the cloud, or to be specific, iCloud, had been faster than me.

In hindsight, I of course "know" that I should have turned off the WiFi connection when deleting my contact data on my old laptop. But I hadn't been aware yet of what cloud synchronization means. Now I know what using the "cloud" can entail and will – hopefully – be more careful in the future.

There is a third part to my story. I would call it "partial success by accident". Long ago, I had installed Bento, a simple flat-file database application, on my old laptop. I never really used Bento, but I updated it regularly. I also bought Bento for my iPad, because I thought I might perhaps need and use it one day. Older Bento versions provided direct access to the data in the Address Book application. The latest Bento version, however, imports the data from the Address Book application; it creates a copy of the original data and cuts the connection to it. This design change actually came to my rescue.