UI Design Blinks 2013

By Gerd Waloszek

Welcome to this column of brief, blog-like articles about various UI design topics – inspired by my daily work, conference visits, books, or just everyday life experiences.

As in a blog roll, the articles are listed in reverse chronological order.

See also the overviews of Blinks from other years: 2010, 2011, 2012.

November 22, 2013: Thank You and Good-Bye!

In October 2010, I eventually came to the conclusion that I ought to join a trend which, at the time, was no longer really new – and publish a UI design blog. I already had some experience with writing blog-like articles, because, between 2005 and 2007, I had published an internal SAP design blog. However, with hindsight, the new articles, called "SAP UI Design Blinks," were often much longer than what you would rightfully expect from a blog...

Actually, we were not the ones who referred to the blinks as a blog. I myself regarded them more as a "column" in the traditional sense. I know that the following is an oversimplification, but, for me, a typical blog is something in which people write about how they got out of bed, whom they met, what they encountered in the course of the day, and what thoughts all this inspired. Thus, it is the events of the day that drive bloggers. Of course, this was never my intention when I conceived the blinks. As in my internal articles, I wanted to report on personal encounters with hardware and software issues and, in so doing, shed some light on the complex interplay between designed artifacts and human beings with their strengths and limitations – by acting as a user advocate and often taking on the role of a DAU (dumbest assumable user).

With this intention in mind, I was not able to follow a strict publishing schedule for the blinks (unless I had wanted to accumulate stories for later use...). Instead, I had to wait until I encountered design issues, was "kissed" by inspiration, or hit on interesting thoughts, for example, in books that I reviewed. In some cases, I also had to conduct some research or experimentation for the blink, which also took time. For me, this uncertainty was not only challenging, but it was also part of the game. In the end, I was confident that, one day, the next topic would emerge "out of the blue," but I never knew when it would happen. And sometimes, several incidents happened all at once, leading to the effect of one blink running straight into the next...

With a small tear in my eye, I have to concede that this is definitely the final UI Design Blink that I will write for the SAP Design Guild Website. Perhaps I will feel inspired to revive the blinks in a private format some day. But, for the time being at least, I would like to take a breather and relax for a while. Not that that will prevent me from encountering a lot more design issues in the future, as experience shows...

Finally, I would like to say good-bye and once again thank all our visitors most sincerely for their trust in and loyalty toward the SAP Design Guild Website (which is no longer available...)!

P.S.: I decided to republish my UI Design Blinks with minor adaptations on my walodesign Website. I also republished the above-mentioned SAP-internal articles with somewhat stronger adaptations on this site. For more information, see the page walodesign Columns - Overview.

November 20, 2013: A Lesson from Colin Ware's Book Information Visualization

Information Visualization, Third Edition: Perception for Design by Colin Ware was the last book I reviewed for the SAP Design Guild Website (no longer available). In the final chapter, I hit upon a surprising figure for the capacity of our long-term memory, which inspired me to remember my roots in physics and attain a perspective based on the powers of ten. Ware points out that:

- "Using a reasonable set of assumptions, Landauer (1986) estimated that only a few hundred megabytes of information are stored over a lifetime."

For my journey across the powers of ten, I make a simplifying assumption, namely that we store 400 megabytes over a lifetime. I do not think that this assumption is critical, because I am only interested in the overall pattern. After all, a real physicist never worries about a factor of two or three...

Here is my "storage powers of ten" table with some numbers, factors, examples, and comments:

| Bytes | Factor (Brain, 400 MB = 1) | Factor (Page, 4 KB = 1) | Examples | Comment |

| 4 KB | 0.00001 | 1 | Amount of RAM in my first computer; text-only book page (2,000 double-byte characters) | I use double-byte characters in this table to simplify the calculations. |

| 40 KB | 0.0001 | 10 | Storage size for a typical Web photo (600 × 450 pixels, low-quality JPEG compression) | This may serve as an example of a low-resolution image. |

| 400 KB | 0.001 | 100 | 100 text-only book pages; text of my father's autobiography; an early floppy disk for the Apple Macintosh | My father typed his autobiography on an MS-DOS computer. It uses only a thousandth of our memory's capacity. I added photos to it at a later stage. |

| 4 MB | 0.01 | 1,000 | Storage size for a typical digital photo (10 megapixels, JPEG compression); amount of RAM in my Apple Macintosh SE/30 | Compare this with our brain... |

| 400 MB | 1 | 100,000 | Information stored in human memory over a lifetime; 100 typical digital photos; 100,000 text-only book pages; a CD (approximately...) | This is the starting point for everything: the storage needed for a lifetime of experiences... |

| 4 GB | 10 | 1,000,000 | Typical amount of RAM in a laptop computer; USB memory stick (small); SD card; 1,000,000 text-only book pages; a DVD (approximately) | |

| 400 GB | 1,000 | 100,000,000 | 100,000 typical digital photos; a typical hard disk in a laptop computer | |

| 4 TB | 10,000 | 1,000,000,000 | Traces that we leave on the Internet during our lifetime (Marc Smith, 2006); 1,000,000 typical digital photos; a large hard disk | Actually, when Marc Smith made this remark at a conference that I attended in Bonn, Germany, in 2006, I believed that he was underestimating the storage space needed... |

| 40 TB | 100,000 | 10,000,000,000 | Storage space required for video lifelogging (Alan Dix has noted that even 70 years of high-quality video recording would require something less than 30 terabytes of storage; after O'Hara et al., 2008) | This is about how much storage space one would need for video lifelogging: 100,000 times the amount that our memories require... |
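All factors in the table follow from just two reference sizes. The short Python sketch below recomputes them, assuming decimal units (1 KB = 1,000 bytes) and the table's own simplifications: 400 MB for a lifetime of memory and 4 KB for a text-only book page. The names `BRAIN`, `PAGE`, and `factors` are purely illustrative.

```python
# Recompute the "storage powers of ten" factors from two reference sizes.
# Assumptions (as in the table): decimal units, 400 MB of lifetime memory,
# and 4 KB per text-only book page (2,000 characters * 2 bytes).
KB, MB, GB, TB = 10**3, 10**6, 10**9, 10**12

BRAIN = 400 * MB  # assumed lifetime storage of human long-term memory
PAGE = 4 * KB     # assumed size of one text-only book page

SIZES = {
    "4 KB": 4 * KB,
    "40 KB": 40 * KB,
    "400 KB": 400 * KB,
    "4 MB": 4 * MB,
    "400 MB": 400 * MB,
    "4 GB": 4 * GB,
    "400 GB": 400 * GB,
    "4 TB": 4 * TB,
    "40 TB": 40 * TB,
}


def factors(size_bytes: int) -> tuple[float, int]:
    """Return (brain factor, page factor) for a storage size in bytes."""
    return size_bytes / BRAIN, size_bytes // PAGE


for label, size in SIZES.items():
    brain, pages = factors(size)
    print(f"{label:>7} | brain x {brain:g} | {pages:,} pages")
```

Each row is simply the previous one scaled by a power of ten, which is why a factor of two or three in the starting assumption does not change the overall pattern.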

For those who find the brain's capacity somewhat disappointing, Ware explains that "the power of human long-term memory, though, is not in its capacity but in its remarkable flexibility. The reason why human memory capacity can be so small is that most new concepts are made from existing knowledge, with minor additions, so there is little redundancy. The same information is combined in many different ways and through many different kinds of cognitive operations. ... Human long-term memory can be usefully characterized as a network of linked concepts." Hopefully, there are not too many tautological circles in our networks...

There also seems to be a purely visual memory in our brain, but since 100 digital photos require the same storage capacity as human memory does over a lifetime, we can conclude that the images stored in long-term memory cannot be detailed (though we could store 10,000 small Web photos). Ware points out that visual long-term memory does not have the network structure of verbal long-term memory and that "the power of images is that they rapidly evoke verbal-propositional memory traces." Thus, an image can rapidly evoke numerous remembrances, which is an experience that we all know well...

All in all, I would be able to store the lifetime memories of about a thousand people on my laptop's hard disk (512 GB), which is not such a pleasant thought for me... I also remember that artificial intelligence pioneer Marvin Minsky once stated that he would love to dump his brain onto a CD to achieve eternal life. When I read this, I believed that a CD would never suffice for the content of a brain. Regrettably, I was not able to find his original statement when preparing this Blink, but I found an interview with him in which he confirms that a CD (650 MB) would suffice for a brain dump. By then, however, Ware had already convinced me that it could be done – at least in terms of storage capacity...
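The comparisons in the last two paragraphs can be checked the same way. Again, this is a minimal Python sketch under the table's assumptions (400 MB per lifetime, decimal units); `lifetimes_on` is a hypothetical helper name.

```python
# Back-of-the-envelope checks for the brain-dump comparisons.
# Assumptions: 400 MB of lifetime memory, decimal units (1 GB = 10**9 bytes).
KB, MB, GB = 10**3, 10**6, 10**9
LIFETIME = 400 * MB


def lifetimes_on(capacity_bytes: int) -> float:
    """How many lifetimes of memory fit into a given storage capacity."""
    return capacity_bytes / LIFETIME


laptop = lifetimes_on(512 * GB)     # 1280.0: roughly "a thousand people"
cd = lifetimes_on(650 * MB)         # 1.625: a CD holds more than one lifetime
photos = LIFETIME // (4 * MB)       # 100 digital photos of 4 MB each
web_photos = LIFETIME // (40 * KB)  # 10,000 small Web photos of 40 KB each

print(f"Laptop disk: {laptop:,.0f} lifetimes; CD: {cd:.3f} lifetimes")
print(f"One lifetime = {photos} photos or {web_photos:,} Web photos")
```

This compares raw capacity only; as Ware stresses, the power of human memory lies in its flexibility, not in its size.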

References

- Colin Ware (2012). Information Visualization, Third Edition: Perception for Design. Morgan Kaufmann • Review

- Kieron O'Hara, Mischa M. Tuffield & Nigel Shadbolt (2008): Lifelogging: Issues of Identity and Privacy with Memories for Life

- Renato M.E. Sabbatini (1998): The mind, artificial intelligence and emotions – Interview with Marvin Minsky

October 24, 2013: A Tour de Force of Designers in the Software Realm

In various articles on the SAP Design Guild Website (no longer available), I have discussed the different kinds of design and designers. Therefore, a colleague asked me to describe them in fewer than 500 words for an introductory article on the SAP UX Community. However, I not only failed to comply with the 500-word limit, I also did not bear in mind that the intended target audience knows very little about design. So I withdrew the article and went back to the drawing board. For this column, however, the article seemed appropriate to me after some updates, even though I "stole" the beginning from a book review and have written about the topic before. So here is my personal view of what kinds of designers populate the software world in (fairly) short form – and please excuse some repetitions.

When I was young, there were only three design disciplines I was aware of: fashion design, product or industrial design, and graphic design. Naive as I was at the time, I understood the first to be responsible for fashionable clothes, the second for the design of cars, vacuum cleaners, Scandinavian furniture, and so on, and the third for book illustrations, stamps, and advertising. Today, there are design disciplines galore. Particularly in the software world, we find designers who specialize in, say, interaction design, user interface (UI) design, user experience (UX) design, graphic/visual design, or Web design, as well as design thinkers, to name just the most prominent ones. I would therefore like to shed some light on this variety and look for differences as well as commonalities.

Figure 1: Designers may feel differently and may also have a different background (image from my review of The Plenitude)

First of all, the names of the types of designers are neither exclusive, nor do they necessarily reflect everything they do. So keep in mind that my characterizations will unavoidably be gross oversimplifications.

Interaction designers (interaction design, IxD) typically design physical devices, albeit with a lot of software "under the hood" these days. Their focus is on how people "interact" with their designs. Typical applications are museum installations and prototypical devices to explore ideas with people. Some interaction designers (the research through design, or RTD, proponents) claim that this is a viable way of doing research. Often, these designers have an art school background and some of them also feel a bit like artists. Others, like the "critical designers," also feel and act as provocateurs. They often explore future scenarios and want to make people think – and to persuade them to change their behaviors (persuasion/persuasive design).

User interface designers (UI design) typically design user interfaces for software applications. Some people say they just put controls on screens and arrange them to optimize the users' workflow. Good UI designers, however, think holistically, design the complete interaction of users with their software, and take care of the context in which it is used (they think about use cases and scenarios, and prototypical users or personas). Not surprisingly, some UI designers therefore call themselves interaction designers (think of Cooper Interaction Design, now just Cooper). UI designers often have a background in computer science or cognitive psychology – they feel more like researchers or engineers than artists.

(User) experience designers (UX design) can be regarded as a more recent breed of UI (and interaction) designers who put the user's overall experience with a system at the center of their design efforts. This, again, requires a more holistic view. A user's experience can be good or bad, and, of course, the preferred outcome is that it is good or satisfying. This approach is not limited to software; it can be applied to any man-made artifact, to processes such as services (service design), and even to organizational structures (organizational design).

Visual/graphic designers style the visual aspects of products or software applications, be they static or, increasingly, dynamic: colors, forms, shapes, transitions, movements, and more are their realm. Many visual designers, however, do not want to be constrained to the design of visual aspects only. They also address interaction aspects and regard themselves as interaction designers. When they are, for example, involved in the design of screen controls, they are indeed doing interaction design. In contrast to UI and UX designers, most visual designers have an art-related background and feel a little bit like artists, although there are also many self-made designers in this realm.

Web designers were initially regarded as a kind of visual designer who specializes in Web pages. However, with the evolution of the Web into a dynamic medium, the boundaries between Web, UI, and visual designers have become increasingly blurred. Nonetheless, their technical domain is the Web, and, increasingly, mobile apps are based on Web technology as well. Web design, too, attracted and still attracts many self-made designers...

Design thinkers, that is, proponents of the design thinking approach, encourage designers to bring their methods into the business world – either by taking part in business processes themselves or by training business people to use design methods. They maintain that "everyone is a designer," which makes some professional designers frown because people may conclude that they are no longer needed. And, as with UX designers, there are almost no limits for design thinkers in applying their approach (except for some reservations about including designers in the team at all...).

So, now it is up to you to choose what kind of designer you want to be. But whatever job title you will have on your business card, in your professional life you will probably get involved in most of the topics listed above...

References

- September 1, 2012: Design Thinking, Interaction Design, and UI Design (UI Design Blink)

- Milan Guenther (2012). Intersection: How Enterprise Design Bridges the Gap between Business, Technology, and People. Morgan Kaufmann • ISBN-10: 0123884357, ISBN-13: 978-0123884350 • Review

- Rich Gold (2007). The Plenitude: Creativity, Innovation, and Making Stuff. The MIT Press • ISBN-10: 0262072890, ISBN-13: 978-0262072892 • Review

October 17, 2013: Keyboard Schizophrenia...

In my previous UI Design Blink, I mentioned that columnist John Dvorak called the integrated application Jazz "one of the great flopperoos in computing history." In this Blink, I would like to complain about what I myself find "one of the great flopperoos in computing." While it seems to be only a "minor" and peripheral issue, it nevertheless annoys me nearly every day...

The Background Story

Long ago, maybe in 1984, when the Macintosh entered the market, but maybe even earlier with the arrival of the Lisa, Apple decided to deviate from the standard computer keyboard and introduced the "Command" ("Cmd") and "Option" keys as substitutes for the "Control" ("Ctrl") and "alt" keys. (This probably "inspired" Microsoft years later to add the "Windows" key to their keyboards…) For many years, this "feature" did not bother me at all because I owned a Mac and used it exclusively.

Later, and for reasons unknown to me, Apple added a "ctrl" key to its keyboards and also an "alt" label to the "option" key (probably for design reasons, they labeled both in lowercase; the "Cmd" key one day also got a lowercase "cmd" label...). The presence of Apple's "ctrl" key remained a mystery to me for a long time. Apart from its use in some early and slow Windows emulators, it seemed to be useless on the Mac. However, when context menus, which were invoked with the right mouse button, became popular on the Windows platform, Apple finally found a use for it: Since an Apple mouse has only one button, you press the "ctrl" key together with the mouse button to invoke the context menu. At some point, however, I decided to buy a non-Apple mouse with two buttons – and the need for the "ctrl" key workaround vanished for me.

Figure: Sections of Windows (left) and Apple (right) keyboards showing the differences in modifier keys and layout

Using Two Systems Alternately or Even in Parallel

In the early 1990s, I found a job at SAP and had to switch to Windows computers at work. All of a sudden, an interaction element that was targeted at proficient users, and whose use I had highly automated over the years, turned into a disaster for me: keyboard shortcuts. Whenever I switched between the computers at work and at home, I had to keep in mind – and in my hands or, more precisely, in my automatic motor programs – that I needed to use different modifier keys. For example, at work, I had to press "Ctrl-C" to copy something, and at home "Cmd-C" – and analogously for all the other keyboard shortcuts... Moreover, most of the modifier keys had different locations on the keyboards. It took me a long time to get back up to speed and use keyboard shortcuts without errors.

Matters got even worse when Apple switched to Intel CPUs. Thanks to this move, using Windows on a Mac became feasible, with either Apple's Boot Camp solution or a virtual machine such as Parallels Desktop. With the latter, I am able to run both operating systems in parallel on my MacBook (I typically run Windows in a window) – and both use the Apple laptop keyboard. In my work, I often switch between both platforms, for example, to copy something from a Mac application and paste it into a Windows application. This required me to learn "useful" shortcut sequences, such as "Cmd-C + Ctrl-V" for a simple copy-and-paste operation. Actually, these keyboard and memory acrobatics drive me almost crazy. From time to time, I press the "Cmd" key when using Windows – and, to my dismay, the Windows 8 home screen appears and hides the desktop (it seems to emulate the "Windows" key...). I would be able to see the funny side if it were not me who was affected. And I wonder for how many more years I will need to cope with this absurdity.

More Issues...

But there are more keyboard-related issues, for example, the "Delete" key topic. On the Mac, you press the "Backspace" key to delete unwanted characters in a "backward" fashion. In Windows, you typically use the "Delete" key to delete them, albeit in a "forward" fashion. A backward delete using backspace may also be possible in some Windows applications, but does not seem to be popular. For deleting more complex entities, however, you have to press a "Cmd-Backspace" key combination on the Mac, whereas in Windows the "Delete" key alone does this job. Things became even more confusing when I started to connect my Mac to the SAP company network using a Citrix client, working within a Windows environment there. In this context, the "Delete" key is emulated by an "Fn-Backspace" key combination on my Mac. There may be logic behind all this, but it does not appear user-friendly to me...

Final Comments...

All of these examples share one common theme: For people who use more than one system, the keyboard-related inconsistencies between operating systems (or working environments) prevent keyboard shortcuts from becoming what they are intended to be: fast routes for proficient users. Under such conditions, these users have a hard time working efficiently. In other words: small cause, big impact.

I am unsure whether this "compatibility issue" is on anyone's radar at all – nobody seems to complain about it except me. But this can hardly be the case, given the many "switchers" and people in a situation like mine: different computers at home and work, different platforms on one computer.

Finally, think of the poor people who have to write computer documentation. A number of applications can be used in nearly identical form on both platforms. Most technical writers seem to feel that they have to document the keyboard shortcuts for both platforms – what a waste of time and effort (and documentation space). The same is true for platform-independent browser-based applications.

Admittedly, I would like to give Steve Jobs, or whoever was responsible for the decision to modify the Apple keyboard, a good shaking. Probably, pure marketing considerations were the reason for this decision (or was it arrogance?). But regrettably, Steve is no longer with us...

October 8, 2013: Milking the Wooly Sow for Eggs

We Germans have a name for something that "does everything": We call it an eierlegende Wollmilchsau. That's an egg-laying, milk-giving, wool-bearing sow. Such a sow would, of course, simplify a farmer's life considerably. This is also the idea behind universal tools such as the Swiss army knife, food processors, and other "all-in-one solutions." By the way, in this article I will use the acronym "ElWoMS" to refer to this concept.

Figure 1: My interpretation of the eierlegende Wollmilchsau (ElWoMS) using an image from an old biology textbook

Naturally, the "all-purpose" concept also spread to the software world, for example, in the form of so-called "integrated applications." The short-lived Jazz office suite – columnist John Dvorak called it "one of the great flopperoos in computing history" – was an early instance of this on the Apple Macintosh. Microsoft Office is another one and is still "the reference" for integrated applications, although – in contrast to Jazz – it consists of a bundle of separate applications. One might also regard a single application like Microsoft Word as an "ElWoMS" – albeit one with a smaller scope – because Word attempts to be "everybody's darling" by offering functionality for all the potential uses that might emerge in the realm of text processing. And, last but not least, the SAP Business Suite is also a highly integrated system, this time on a much larger scale, offering an extensive range of business functionality within one coherent framework.

Integration has a lot of advantages, but it also has severe drawbacks. Among other things, people bemoan the complexity of all-embracing applications and application suites and complain that essential, or just the currently needed, functionality is buried within huge menu structures and is therefore hard or even impossible to find. Quite often, people don't even know what functions are available. Therefore, and quite naturally, a counter-movement emerged: simple applications that serve just one purpose, for example, extremely simple applications that allow you to compose text without any distractions such as formatting. On the other hand, such applications lack more advanced functionality and are thus limited in scope. The advent of mobile devices and their limited resources gave a strong boost to this approach: We entered the "age of the apps." Today, we have "apps" galore, many of them serving a single purpose or only a few purposes, and we can buy them online in app stores for just a few dollars or euros.

In her book The Mobile Frontier, Rachel Hinman declares "In mobile UX, applications are the star." And indeed, instead of zillions of menu commands in one application or application suite, we now have zillions, or at least tens to hundreds, of apps installed on our mobile devices – be they phones, tablets, or, in the near future, "intelligent" clocks and glasses. On my iPad, I have already accumulated four home screens with a total of 72 apps – and this number will definitely grow. Do I know on which screen I can find a certain app's icon to start the app? Of course not. I have to browse the home screens to spot the icon of the app that I need at the moment*. Are we just replacing one source of complexity with another?

For a while, I believed that documents were the important entities on my computer. They are the things that persist over the years, irrespective of computers, operating systems, and application versions (provided that there is an app that can still read them, which is another sad story...). I regarded applications just as the "tools" that I needed to manipulate them, but times – and opinions – seem to have changed. Considering what Hinman writes, I feel that, in the "brave new app world" of mobile computing, things have become even worse:

- "Because many mobile interactions are inherently ephemeral and have no output – no file to save and store – the application becomes a natural set point for the interaction. Files that are created as output on a mobile device, such as pictures taken with a camera phone, are usually saved in either the application itself or a 'space' strongly associated with the application (such as a gallery), reinforcing that the application is the set point of the experience, not the file."

Actually, these "jealous" apps drive me and many other mobile users crazy. Burying documents within apps makes it hard or even impossible to exchange them between apps. Consequently, tool apps flourish that promise to perform such transfers easily – and lead to even more apps that you have to care for.

Conclusion

Complexity will be the death of ElWoMSs, while the rise of mobile computing is bringing on a boom in small, single-purpose apps. But I doubt that, in the long run, this change will really take us any nearer to "ease of use" (or whatever we call the idea that software simplifies our lives instead of making them more complex), particularly when we perform more complex and demanding tasks on our mobile devices. But I may well be wrong with my prediction – only the future will tell...

I am also worried about the "sustainability" of documents that are tied to potentially short-lived apps. My experience on desktop computers in this respect is not encouraging. There is no reason to expect anything better from mobile apps. Hinman does not seem to worry much about that because she focuses on mobile interactions that "are inherently ephemeral and have no output." Sadly, that's only half the story.

Comments

*) Of course, I could sort the apps into groups that make more sense to me, but this is cumbersome on the iPad. It seems to be easier using iTunes, but till I started writing this article, I was too lazy**. Anyway, I am skeptical whether such an arrangement would last for long. On my Macintosh and Windows computers, the computer from time to time "helpfully" rearranges icons for me that I had laboriously arranged my own way on the desktop. It always takes me an awful lot of time to restore my own icon arrangement. So I hesitate to do any icon arrangement work on my iPad's home screens.

**) While writing this article, out of curiosity I made a first attempt at organizing my iPad apps using iTunes. It took me some time, but the effort was not excessive. (It would be for hundreds or thousands of apps...). I even discovered by accident that you can organize apps in folders. Now I am waiting to see how long my arrangement of app icons on five home screens (that’s one more than before) will last. It did survive the update from iOS 6 to iOS 7...

References

- Rachel Hinman (2012). The Mobile Frontier: A Guide to Creating Mobile Experiences. Rosenfeld Media • ISBN: 1-933820-55-1 (Paperback), ISBN: 1-933820-05-5 (Digital editions) • Description at Rosenfeld Media • Short presentation • Review

October 2, 2013: Making Life "Easier" on Vacations?

We are constantly being told that technical devices make our lives easier and more pleasant. We therefore accumulate quite a few of them during our lifetime. As vacations are an important part of our lives, it is not surprising that some of our devices come with us. In this UI Design Blink, I will not only reveal which devices my wife and I took with us on our recent vacation, I will also discuss how well they lived up to the notion of "making life easier."

A Deceptively Small Collection

Apart from our two mobile phones, which I will not discuss here, our device collection for our vacation seemed to be fairly small: It comprised two cameras, one for each of us, and an iPad, which we wanted to use to store and preview the many photos that we would take, to store my wife's voice annotations, assuming she really would use her voice recorder (see Fitting a Device to Usage Habits – A Usability Lesson), to listen to music, and to stay connected with the rest of the world via e-mail and the Internet. The Internet was very important for us, as we wanted an up-to-date weather forecast (from three weather Websites so that we could pick the weather that suited us best...). But while these devices definitely made our vacation more pleasant, there was also a dark side to them – in fact, more than one dark side. In the following, I will shed some light on this "darkness."

The "Dark Sides"

The "darkness" refers to the sad fact that all three devices I mentioned above require that you take additional devices with you (by the way, the same is true for the mobile phones).

Firstly, you need extra devices to take full advantage of the iPad's capabilities: The iPad's loudspeaker is not good enough for us to listen to music. So I took a small loudspeaker that I can connect to it. Thanks to Apple's "higher insights," the iPad does not allow you to connect USB devices other than cameras or SD cards to store data on it. So I purchased a storage device called iUSBPort that allows me to transfer my wife's voice annotations from her voice recorder to the iPad. Finally, if, like me, you did not buy the cellular version of the iPad, you also need a device to connect the iPad to the Internet, at least if you stay at a simple campsite where there is no WiFi. So I also took a MiFi device with me. All in all, instead of one device, I actually had four...

Figure 1: Plugs for connecting some of my devices with a USB charger

But there is more "darkness": As Alan Cooper pointed out years ago, today's cameras are computers and need a battery to operate. Actually, apart from the voice recorder, all of the devices that I have mentioned so far have a built-in or extra battery that needs to be recharged. Four of my devices could, at least in theory, use the same USB charger, but each of them has a different cable and plug that connects to it (see Figure 1). In addition, we had to add a number of battery chargers to our luggage. For example, each camera uses a different battery, and because these batteries are not charged within the cameras (something some newer cameras do), every camera requires its own charger. Since these chargers need 220V, I also had to take a 12V to 220V converter with me. So we arrived at a total of five more devices and a number of cables for charging purposes (see the table below).

Finally, from a usability and "making life easier" point of view, the real bummer is that no two devices use the same conventions for charging the battery. To get an overview of the situation, I created the table below (to which I added my older camera, which nearly joined us but was replaced with a newer "toy"):

| Device | Charging | Fully Charged | Low Battery (Incomplete) | Charger | Plug | Used? |
|---|---|---|---|---|---|---|
| iUSBPort (Storage) | Orange LED | Green LED | Shown on the LCD display | USB charger (not included with device) | Plug used for power supplies (rightmost in Figure 1) | No |
| Huawei MiFi E5331 | Blinking green battery symbol | Steady green battery symbol | Red battery symbol | USB charger* | Special plug, similar to mini USB (second from left in Figure 1) | Yes |
| x-mini loudspeaker | Red LED | Blue LED | Blue LED dims | USB charger (not included with device) | USB mini plug (third from left in Figure 1) | No |
| Ricoh GXR camera | Green LED | LED off | Shown on camera display (green battery symbol with three steps, turns to orange) | Special 220V charger plus 12V to 220V converter | 220V plug | No (not included) |
| Ricoh CX 4 camera | Orange LED | LED off | Shown on camera display (green battery symbol with three steps, turns to orange) | Special 220V charger plus 12V to 220V converter* | 220V plug | Yes |
| Leica X Vario camera | Red LED | Green LED | Shown on camera display (green battery symbol with three steps, turns to red and blinks) | Special 220V charger plus 12V to 220V converter* | 220V plug | Yes |
| iPad | Shown on iPad display (green progress bar in battery symbol, % value) | Ditto (progress bar is white when not charging; 100 %) | Shown on iPad display (progress bar is white in battery symbol when not charging; % value) | USB charger* | Special plug (Apple Lightning; leftmost in Figure 1) | Yes |

*) Included chargers etc.

The table above reveals that some of the chargers and devices indicate the state of the charging process using an LED. While the battery is charging, the LED glows green, red, or orange. When the battery is full, the LED may turn green or blue, or switch off. A low battery is indicated in many different ways. Yes, there are two devices in the table that use an orange LED, but in one case the LED turns green when the battery is fully charged, and in the other it switches off (as some other LEDs do as well).

My Personal Lament...

Who is not confused by all this variety? Who always knows when a battery has finished charging, or how much power it still has? Well, I do, but it took me a lot of effort to learn. It would probably have been a good idea to print the table and have it ready when one of the devices required recharging. Making life easier? That's laughable! Not at all! Why can't manufacturers agree on a standard for charging batteries and displaying their power level? After all, humans agreed – admittedly, more or less – to drive on the right, and thanks to this agreement we survive in the traffic jungle... I would have survived my vacation in much better shape if battery charging had been easier – and also if I had needed fewer devices to satisfy my needs.

I have just finished reading Dan Saffer's new book Microinteractions. It inspires me to frame displaying battery power level and charging progress as microinteractions. Obviously, the designers of the devices mentioned in this article conceived these as "signature moments," that is, as product differentiators and not as something that needs to be consistent and standardized...

P. S.: I would like to point out that, except for the addition of a camera to the table above (which might have been included in my vacation list), I have not made up this example – it is the simple truth (and, as indicated, the list is not even complete). I am also proud to point out that we do all the charging from our car battery: we do not use extra electricity.

References

- Dan Saffer (2013). Microinteractions – Designing with Details. O'Reilly Media • ISBN-10: 144934268X, ISBN-13: 978-1449342685 • Short presentation • Review

September 11, 2013: With a Smile on His (or Her) Face…

In the last few months, I've often had to take the bus instead of riding my bicycle when commuting to work. During that time, I observed a lot of people using their mobile or smart phones. I noticed again and again that people started to smile when they picked up their phones, and while they talked with their friends, relatives, or loved ones. Of course, I also observed a number of incidents in which people were not friendly at all when talking on their mobile phones. But as a general rule, I can state that the smiles won hands-down.


This smile is not restricted to the use of phones, of course. The same thing happens, for example, when my wife gets her camera ready to take a picture of me. After some fiddling around with her camera, there is a certain moment when she starts to smile. Over the years, I have learned that this is precisely the moment when I should smile as well, so that she can take a nice photo. But I think that this smile also expresses her enjoyment in taking a photo of me.

There are many more stories telling how technical devices, or products, make us smile when we use them. The advertising industry has, of course, been well aware of this phenomenon for a long time now. There is not one advertisement in which people are not smiling – well, OK, except maybe for the ads that are meant to intimidate us because we do not use a certain toothpaste or eat a certain company's health food… But this omnipresent smile is not what I want to point to here. The advertising industry has also learned to use more subtle marketing cues because these seem to be much more effective. Here is an example: Years ago, when my wife and I visited the Volkswagen Autostadt in Wolfsburg, Germany, we watched a video promoting the new Skoda Superb. It showed a man driving the car through Prague who gradually started smiling, apparently because he really enjoyed driving it. At least this was what the video wanted to convey to its audience…

The Superb example differs from the previous ones in that no other people are involved. Thus, the mere use of a technical device can make us smile, but obviously we smile even more if other people are involved – after all, we humans are a social species. The Superb video tries to suggest that using a certain product makes people smile. I am somewhat skeptical of this message, although it might hold true in the case of "designer products" (which people are proud to own). I believe that most mobile phone users don't actually care whether they use a Samsung, Nokia, or Apple phone. Contacting other people with ease – or just thinking of the person they are going to contact – makes them smile. The lesson for designers that I draw from all this is: If people smile while using a product, the technology does not interfere with their needs and therefore plays a subordinate role. So the designers of such products can rest assured that they did their job well. They probably didn't attract people to that particular brand or product as such – they just made it easy for people to satisfy their needs.

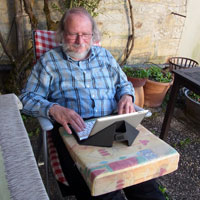

|

|

|

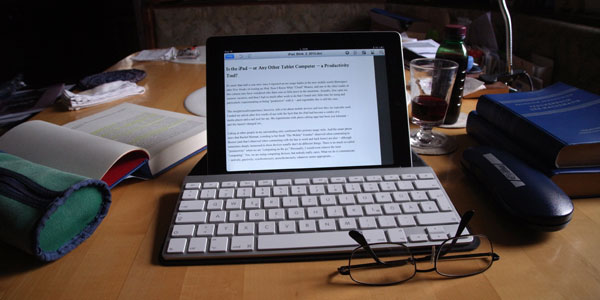

||

Figure 1-3: Figures revisited – A slight smile can be observed in front of an iPad…(click images for larger versions) |

||||

By the way, I see very few people smile in front of their desktop or laptop computers. Are we UI and UX designers doing something wrong?

August 30, 2013: Swinging Between Designing the Big and the Small Things

In this UI Design Blink, I fit various puzzle pieces together to produce an Aha! experience and the insight that I "knew it all along"... I'm talking – in design terms – about tackling "big problems" versus devoting one's attention to the "small ones" which were only recently dubbed "microinteractions."

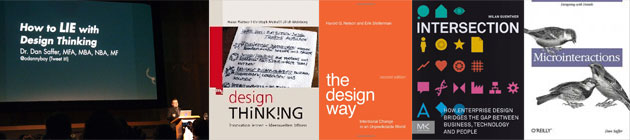

Puzzle Piece #1: Dan Saffer and Design Thinking

At the Interaction 2012 conference in Dublin, Ireland, I attended Dan Saffer's 10-minute presentation (or should I say "performance"?) "How to Lie with Design Thinking" (see references below). After his presentation, one point at least was very clear to me: Dan Saffer does not like Design Thinking. At SAP, this direction – driven by the d.schools in Stanford and Potsdam – has attracted a great deal of attention and gained momentum. While I am not involved in any Design Thinking activities, I wrote an article about it on this Website to become more familiar with its much-heralded approach. But I have to admit that the spark has not yet caught and that I still observe Design Thinking from a distance. Promoters of this direction point out that Design Thinking brings the design approach into the business world. Thus, they "think big" and apply design methods to areas beyond their classic confines, thereby turning everyone into a designer.

Figure 1: Some of the inspirational sources for this UI Design Blink

Puzzle Piece #2: Thinking Big – The Role of Design in Society and Designing "Big Systems"

I have encountered two more instances in which, in my opinion, designers use the term "design thinking" not to refer explicitly to the Design Thinking direction, but more generally to mean "thinking like a designer." In practice, this may not be too far away from what Design Thinking proponents have in mind... My first encounter of this kind happened when I reviewed Harold G. Nelson's and Erik Stolterman's book, The Design Way: Intentional Change in an Unpredictable World. This book advocates establishing design as a human tradition in its own right, on a par with other traditions such as art and science. Since the authors highlight the role of design in society, I would hold that they too "think big."

Reviewing Milan Guenther's book, Intersection: How Enterprise Design Bridges the Gap between Business, Technology, and People, entailed another encounter with the tendency of designers to "think big." Guenther's book addresses the redesign of enterprises, but its framework can be applied to any "big problem." Both encounters helped me broaden my horizon and my view of design, but they also made me feel somewhat uneasy in my role as a (UI) designer. "Is 'thinking big' really what I want to do as a designer?" I asked myself.

Puzzle Piece #3: Dan Saffer's Microinteractions

Once again, my inspiration stems from a book that I am currently reading for review: Dan Saffer's new and highly acclaimed Microinteractions: Designing with Details. At the time of writing this article, I have only read the first 20% of the book (in an e-book, page numbers don't tell you much...), but I think that what I have read already suffices to provisionally determine my position as a designer.

In the preface to his book, Saffer explains why he decided to write a book about microinteractions:

"Over the last decade, designers have been encouraged to think big, to solve "wicked problems," to use "design thinking" to tackle massive, systemic issues in business and in government. No problem is too large to not apply the tools of design to, and design engagements can involve everything from organizational restructuring to urban planning. The results of this refocusing of design efforts are unclear. But by working at such a macro scale, an important part of design is often lost: the details that delight."

These words really struck a chord with me. Saffer summarizes my – and perhaps the overall – situation perfectly. It is not the "big picture" that attracts me as a designer: It is the details.

And now to this Column...

In his foreword to Saffer's book, Don Norman points out that designing satisfying microinteractions requires "great observational skills: watching people interact, watching yourself interact, identifying the pain points, ..., and then determining which things make sense to bring together." In my case, being more a writer than a practicing designer, it is often the second activity, watching myself interact and stumble, that provides inspiration for me. Sometimes, it is also my wife, my friends, or my colleagues. And as soon as I make such an observation, a new UI Design Blink is born, telling a story about the difficulties that humans have with technology, about people's weaknesses, and also about instances of mindless design, mostly at the level of microinteractions...

References

- Dan Saffer (2013). Microinteractions – Designing with Details. O'Reilly Media • ISBN-10: 144934268X, ISBN-13: 978-1449342685 • Short presentation • Review

- Hasso Plattner, Christoph Meinel & Ulrich Weinberg (2009). Design Thinking. mi-Wirtschaftsbuch • ISBN-10: 3868800131, ISBN-13: 978-3868800135 • Review

- Milan Guenther (2012). Intersection: How Enterprise Design Bridges the Gap between Business, Technology, and People. Morgan Kaufmann • ISBN-10: 0123884357, ISBN-13: 978-0123884350 • Review

- Harold G. Nelson & Erik Stolterman (2012). The Design Way: Intentional Change in an Unpredictable World (2nd Ed.). The MIT Press • ISBN-10: 0262018179, ISBN-13: 978-0262018173 • Review

- Dan Saffer (2012). How to Lie with Design Thinking on Vimeo (video)

August 6, 2013: Coffee Machines, the Burdens of Experience, Resulting Mental Models, and a Lack of Attention – and the Role of Design

Recently, my team moved to a new building, meaning that we were confronted not only with a new environment, but also with a new coffee machine. Actually, the new machine is the same model as the ones I am used to. But, as always, and as my story shows, the devil is in the details (see Figures 1-3 for the coffee machines).

Figures 1-3: The coffee machines that play a role in this UI Design Blink: the coffee machine on my level in the old building (Figure 1), the coffee machine one level lower that brews decaffeinated coffee in the old building (Figure 2), and the coffee machine on my level in the new building (Figure 3)

Introduction and Story

Figure 4 shows an example coffee machine control panel. Labels indicate the available coffee specialties, and the associated buttons allow you to brew them – everything is neatly arranged in rows. Let us ignore the LCD panel at the top of the control panel, which shows a simulation of the brewing progress (you can watch the real process, though, if you look at the coffee mug itself...). As I usually do not wear glasses, I cannot read whatever else the panel wants to tell me anyway. All in all, the control panel is straightforward. I should also mention that the machine in Figure 1 brews regular coffee, while the machine in Figure 2 brews decaffeinated coffee, indicated by a label at the top left (see Figure 2 above and Figure 6 below for details). Therefore, over the years, I built up the expectation (or mental model, for the pros...) that a coffee machine can brew either regular or decaffeinated coffee, but not both.

Now to my story: On my first day in the new building, I went to the coffee machine on my level, which was new to me, and found a white label stating "Decaffeinated" (see Figures 5 and 7 below). "Oh," I said to myself, "I have to go to another level for regular coffee, what a nuisance!" And so I did. The next day, when my colleague returned to the office after a short vacation, I told him that only decaffeinated coffee was available on our level. Much later, we talked about this nuisance again, and he told me that getting regular coffee on our level was no problem at all.

Figure 4: Coffee machine control panel

A little confused, I went to the coffee machine to investigate how I had arrived at my – wrong – conclusion. There were indeed four buttons for regular coffee specialties. The fifth, and bottom, button was, however, reserved for decaffeinated coffee. The respective label was inverted and thus highlighted so that people would not select decaffeinated coffee by mistake. I found that there was nothing really wrong with the control panel, perhaps apart from the fact that there was just one unspecified type of decaffeinated coffee so that, at the beginning, you do not know what kind of drink you will get. It seems, by the way, to be café crème...

Analysis

Now let me analyze my misunderstanding:

- Expectations (mental model): Based on my past experience, I expected coffee machines to provide either regular or decaffeinated coffee but not both. Therefore, whenever I find a label "Decaffeinated" attached to a coffee machine, wherever it may be, I immediately assume that the machine delivers only decaffeinated coffee.

- Lack of attention and care: I spotted the "Decaffeinated" label, drew my quick – and wrong – conclusion, went away, and even spread the "message." A more careful look at the machine would have revealed that I was wrong.

By the way, at the top of the coffee machines there are two storage containers for coffee beans (see Figures 1-3). When I analyzed how I arrived at my wrong conclusion, this fact made me wonder whether these machines can really brew both types of coffee. However, as far as I can see, two containers are used for reasons that are unrelated to the regular/decaffeinated question (the small container on the top left of the new machine seems to be dedicated to ground decaffeinated coffee).


Figures 5-6: "Decaffeinated" label on the machine from Figure 3 in my new location (detail; left); label stating that the machine from Figure 2 delivers only decaffeinated coffee (right)

Figure 7: Detail of Figure 5 – label "Entkoffeiniert" ("Decaffeinated")

There is a famous saying among designers that "there are no user errors, only designer errors." But after analyzing my behavior, I was inclined to question this statement. What can a poor designer do if users are as rigid and inattentive as I was? Isn't this design fully adequate, considering that the label "Decaffeinated" is next to a button, as it is for all the other coffee specialties, and not in a "global" location as I was used to (see Figures 5, 6, and 7)? However, when I discussed the current design with my colleague, he pointed me to a number of inconsistencies in it, and we came to the conclusion: The "poor" designer of this control panel could indeed have done better. Here are a few reasons why:

- The labeling is inconsistent: For regular coffee, the label indicates the respective specialty; for decaffeinated coffee, it only says that the coffee is decaffeinated, not what kind of drink it is.

- The "Decaffeinated" label is inverted and thus looks different from the labels for coffee specialties. One might therefore assume that it points to something different. On the other hand, it is in the position of a label indicating a coffee specialty. One might call this argument "hair-splitting", but all this considered together makes it a little confusing.

Suggestion for Improvement and Take-Away

Given that my colleague and I discovered some inconsistencies in the design of the coffee machine's control panel, how could the panel be improved? Since I do not want to put the coffee machine maker's designer out of a job, here is just a quick-and-dirty proposal that, at least, is more consistent:

Figure 8: One of many possible ways to make the coffee machine labeling more consistent and to avoid confusion ("Ohne Koffein" = decaffeinated coffee)

From a health point of view, the regular coffee should probably be flagged red. From an SAP employee point of view, however, it should be the way I proposed it – and my colleagues may be right because, according to more recent research, caffeine may not be as bad for us as we have been told in the past... And as a quick take-away from this story we, once again, find that – just as Dan Saffer explains in his new book Microinteractions – small details do matter.

References

- Dan Saffer (2013). Microinteractions – Designing with Details. O'Reilly Media • ISBN-10: 144934268X, ISBN-13: 978-1449342685 • Short presentation • Review

August 1, 2013: Suggestions for Expanding Don Norman's New Version of His Book The Design of Everyday Things

Just recently, a colleague sent me an e-mail to point me to a new version of Don Norman's all-time classic book The Design of Everyday Things and also to a new training course developed by Udacity that is based on the book. When I followed the link that he had sent me, I found out that the book is now entitled, The Design of Everyday Things: Revised and Expanded Edition, and that it will be published at the beginning of November this year – I thought that it had already been published. The Udacity course will be available in fall as well. So there is still some time left to speculate about how Norman will revise and expand his book. And where better to do this than in a UI Design Blink?

In his seminal book, Norman discusses design issues for a bunch of everyday things, such as switches and switchboards, doors, faucets, ovens, and thermostats, but it appears to me that he does not deal with traffic signs and lights. Taking a closer look at this omnipresent aspect of our everyday life might present us with some opportunities for expanding Norman's book. And why not combine this with another ubiquitous aspect of our lives: advertising. This idea came to my mind when I was browsing the leaflet of a popular German discount supermarket. Figures 1 to 4 show a small selection of snippets from the leaflet that inspired me:

Figures 1-4: Advertising elements that inspired most of my design proposals: "Only for a short time" (Figure 1), "37% cheaper" (Figure 2), "33% more content" (Figure 3), and "You save 40%" (Figure 4)

Soon, a few initial ideas emerged of how advertising techniques might make traffic signs and traffic lights more persuasive and thus more "usable." I present them below for the readers of the UI Design Blinks.

Green Traffic Lights

At road junctions, you typically have to wait for the traffic light to turn to green. When it eventually does, the driver in front of you, instead of dashing across the junction to allow as many other drivers as possible to pass it, often does not set off or hesitates before doing so. It seems as if a lot of drivers need a gentle reminder that time is limited after the traffic light has turned to green. What about a sign next to the green light that turns on together with it and that starts to flash when there are only a few seconds left? Here is a simple prototype based on Figure 1 demonstrating my proposal:

Figure 5: Traffic light that makes it clear to drivers that the green light will not be on forever

Detours

Nobody likes detours, because they often force you to take a longer route on small and slow roads. On the other hand, they may take you to places you would otherwise never have discovered. There are many ways of turning a detour into a great experience. Drivers might, for example, stop at a small cafe and have a cozy break. But how can we make detours more attractive to drivers so that they do not feel compelled to find out whether they can somehow get past the blocked road (and then have to drive all the way back because it is indeed blocked)? Here is one proposal for making a detour more attractive: The construction team might announce it as a special offer:

Figure 6: Announcing a detour (Umleitung) as a special offer

Start and End of Speed Limit Zones

Drivers hate speed limit zones for a number of reasons, one of them being that they often come with radar traps. However, if we presented the speed limit as a special offer (Save up to 60%!), and the end of the zone as a bonus (60% more speed!), who would not be willing to obey the traffic signs?

Figures 7-8: Additional advertising elements to persuade drivers to stick to the speed limit and to show them what they will gain afterwards. Left: Savings of up to 60% – an offer you wouldn't want to refuse (after Figure 4)... Right: ... in order to gratefully welcome the speed increase at the end of the speed limit zone (after Figure 3)

City limits are also a great opportunity for addressing drivers in persuasive ways:

Figures 9-10: Similar advertising elements can also be used at city limits. Left: Reducing speed when entering a city looks like a real bargain... Right: ... as does increasing speed when leaving a city (after Figure 2)

Conclusions

I firmly believe that Donald Norman has his own plans for how he will expand his classic book The Design of Everyday Things. But perhaps our ideas will coincide, who knows. And perhaps our readers also have some ideas of their own to add...

References

- Donald A. Norman (November 2013). The Design of Everyday Things: Revised and Expanded Edition. Basic Books • ISBN-10: 0465050654, ISBN-13: 978-0465050659 (Paperback)

- Blog post on upcoming Udacity course based on the book

- Donald A. Norman (2002). The Design of Everyday Things. Basic Books • ISBN: 0465067107 (Paperback) • Review

July 17, 2013: A Journey Across Touchpoints at Heidelberg Main Station

Several years ago, I attended a presentation at the World Usability event in Stuttgart, Germany, in which designers reported on the redesigned ticket machines of Deutsche Bahn (DB; German Railway). This UI Design Blink is about these machines. But instead of referring much to their (hopefully now improved) usability, I would like to tell you a story that Milan Guenther would probably characterize as a "journey across touchpoints." I encountered this notion for the first time while reading Guenther's book Intersection, in which he writes about touchpoint orchestration, a new design discipline. My personal journey was initiated by the simple task of buying a train ticket and consisted of quite a few technical and human touchpoints. As always, it also involved a number of human and technical weaknesses. Here goes:

Recently, I made a short trip to Heidelberg to buy myself a new "toy." The touchpoint journey that I want to describe here began when I entered Heidelberg Main Station to return to Walldorf by suburban train (S-Bahn). I needed a ticket for the trip back, so I looked around for a ticket machine. I found two machines standing side-by-side, but both were occupied. I waited behind a young couple who needed quite some time to fish all their change out of the machine. Then it was my turn! I had some difficulties entering my destination station – not because of bad machine usability, but because I was too lazy to get my glasses out of my backpack, and because I did not always press the touchscreen keys hard enough. Without glasses, I was not able to spot the backspace key to correct my incomplete input, with the result that the machine suggested and selected unsuitable locations. After three attempts, I was finally ready to buy my train ticket. I inserted a €20 bill into the machine, but the machine spat it out. The bill was a little bit crumpled, so I smoothed it out and tried it once more – again, without success. I had a second €20 bill in my wallet, so I tried that one, too – in vain. Finally, I took a closer look at the machine and, to my dismay, realized that it accepted only €5 and €10 bills, which I did not have. "Damn, how can I get such bills here?" I cursed to myself and looked desperately for a change machine. I could not see one, but there were two old men in a small office whom I asked for assistance. They told me that there weren't any change machines in the whole railway station and suggested I go to the bank located in the station and ask them to change the €20 bill. As I left, they further suggested I visit the DB travel center and ask for help there, which I felt was the better idea.

Figures 1-2: DB ticket machine at the Wiesloch-Walldorf train station (left) with a bank machine located nearby (right) (photos taken with my new "toy")

When I entered the travel center, I was a little bit confused: There were lots of counters and lots of people waiting to be served, but when and how could I be served? Did I have to line up, or what did they expect me to do? Luckily, I found out pretty quickly that I had to pull a number ticket. The number ticket machine had a large touchscreen with a huge button for requesting a ticket. That was easy enough, even without glasses, but probably complete overkill from a technical point of view. Anyway, my number was a lot higher than the one currently being served, so I walked around the travel center a bit, found a few more ticket machines, and finally observed an older employee helping a blind person access a counter. The employee soon returned and asked if anyone else needed help. I asked if it was possible to change a €20 bill in the travel center. He took the bill, went to a counter, and soon returned with two €10 bills. I was relieved, thanked him, and rushed to one of the ticket machines I had discovered while walking around the travel center.

Everything went like clockwork at the ticket machine now – I didn't even need to put my glasses on. However, when I inserted one of the €10 bills to pay for the ticket, the machine did not accept it and returned it immediately. After a second futile attempt, I repeated these steps with my second €10 bill – again, no luck. At this point, another older employee approached me and asked me whether he should try inserting the bill for me. He appeared trustworthy and I figured he probably wouldn't be able to run away with the bill quickly, so I agreed. And voilà! This time the machine accepted the bill, and I got my ticket and some change. I asked the man whether he had a certain technique for inserting the bill. With a smile, he answered, "Maschinen sind auch nur Menschen!" (machines are just human beings too). I thanked him and rushed to the departure platform. But thanks to all the technical delays, or touchpoints, to be more precise, I was two minutes late and the train had already left (go figure: on my trip to Heidelberg, the train was more than 5 minutes late; but now, as luck would have it, it left on time). Luckily, the next train departed only ten minutes later. So there was no reason to be disappointed – and I had learned another lesson as well.

Moral of the Story...

Years ago, I would have lined up at a counter to buy a train ticket. This would probably have involved some waiting, but only one human touchpoint. I would have preferred this over my recent "experience," which featured a total of six touchpoints, three machine-based and three human ones, although a refined analysis would probably reveal even more. I am certain that no good designer thinking about how people buy train tickets would have orchestrated something like this. Shouldn't designers have to deal with all the complexities of life before they can rightfully claim to maintain a holistic view? Nevertheless, I assume that DB had at least a hunch this would happen. Otherwise they would not employ those helpful staff members in their travel centers.

References

- Milan Guenther (2012). Intersection: How Enterprise Design Bridges the Gap between Business, Technology, and People. Morgan Kaufmann • ISBN-10: 0123884357, ISBN-13: 978-0123884350 • Review

July 10, 2013: iPad Dog Salon

By Nina Hollender, SAP AG

When you Google the words "iPad" and "children" plus "coloring" on the Internet, the first hits you get are Web sites discussing "young children's addiction to the iPad" and "the best drawing apps for kids." Has the iPad led to children only wanting to draw and color in the digital world?

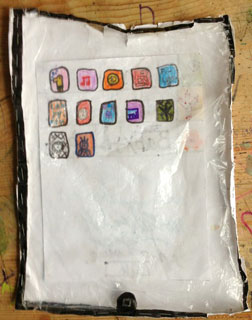

I'd like to tell you a (true) story that shows how an iPad can, in fact, inspire kids to draw and color the traditional way, on paper: My eight-year-old niece Josi, who doesn't get to use a real iPad as often as she would like, decided one day to create her own iPad and dog salon app with the help of paper, scissors, coloring pencils, and a few other utensils. (I swear I never discussed paper prototyping or the aspects of an interaction designer's job with her before then :-))

Her dog salon app includes more than 20 screens in 6x8 inch paper format, all representing different user interfaces, such as a dog status overview (Figure 1), or a selection of different dog collars and ribbons (Figure 2).

Figures 1-2: Dog status overview (left); Selection of dog collars and ribbons (right)

Figure 3 shows the iPad itself, displaying the home screen with the icon for the dog salon app at the top left. Here, Josi drew the front frame of the iPad on a piece of 8x11 inch paper, cut out the screen, and glued the frame to a clear plastic sheet. She then stapled the frame and sheet to another piece of 8x11 inch paper.

Figure 3: iPad prototype set to home screen

Josi later revealed that she had also made games for the Nintendo DS console in the same way, but that the iPad was better because you had more room to draw. On paper, that is, not on the real thing…

July 2, 2013: An Introduction to Milan Guenther's Book Intersection and to the Enterprise Design Framework It Presents

When I recently began reviewing Milan Guenther's book Intersection, my review quickly took on a life of its own, eventually getting so long I needed to curtail it somehow. One option was to shorten my introduction to the book. On the other hand, though, I felt that the long version might be interesting for other UI designers. I ultimately decided to publish the original version of my introduction, with only minor adaptations, as a UI Design Blink. Here it is!

When I was young, there were only three design directions I was aware of: fashion design, product or industrial design, and graphic design. Naïve as I was at the time, I understood the first to be responsible for fashionable clothes, the second for the design of cars, vacuum cleaners, Scandinavian furniture, and so on, and the third for book illustrations, stamps, and advertising. Much later, in my mid-40s, I encountered another design direction, which has since become my "home turf": User interface (UI) design – often disguised under such names as usability, human-computer / man-machine interaction, user-centered design, or even human factors. Fortunate to be able to attend conferences like CHI and Interact, I gradually became, and felt like, a member of this professional community. Our domain is, in short, the dialog between human users and computers, and we strive to make it as effective and efficient as possible. Some people, however, still believe that UI design is just about putting controls on a screen.

At more recent conferences, gurus like Don Norman pointed out that our field has matured. Criteria like satisfaction (see its inclusion in the ISO norms), joy of use, and a great user experience are now important – users take the availability of functionality and ease of use for granted. All of a sudden, a new design direction was born: Experience design. This change in orientation was, among other things, reflected in the renaming of SAP's usability group from "user productivity" to "user experience" (UX) in 2005. Hordes of "experience designers" came on the scene, numerous books on this topic were published, and debates about "experience" abounded at conferences.

It took quite a while for me to discover that the UI design community, with its focus on the design of user interfaces for software applications, is just a subset of design approaches that deal more generally with interactions between humans and technical systems, or artifacts. Contrary to what the name of the famous design consultancy "Cooper Interaction Design" (now "Cooper") suggests, many designers regard interaction design as different from UI design and label it "IxD" to indicate this. At various (mostly smaller) conferences, I came across interaction designers from art schools and universities who applied their own approaches to design and research (and who, by the way, were not at all interested in jobs for UI designers) – like the Research-Through-Design (RTD) direction in the USA (for example, Zimmerman, Forlizzi, Paulos), or Critical Design in the UK (for example, Dunne & Raby, Gaver). This difference is also reflected in the backgrounds of those designers. While UI designers often have a background in science and are trained in the psychology of perception, memory, thinking, and so on, interaction designers tend to come from art or design schools and often feel more like artists – think of the infamous stereotype of the "artist" or "genius" designer. Probably the last thing they would do is ask a user how something should be designed. Nevertheless, they do observe people interacting with their gadgets and ask them afterwards...

In their book The Design Way, Nelson and Stolterman suggest that we understand design as a "third approach" with methods of its own that is on par with art and science. They and other designers use terms like "design thinking," "thinking like a designer," or "designerly ways of working." For me, this promotion of the role of design in society is also reflected in a broadening of the scope of design by applying design methods beyond traditional realms. For example, the "Design Thinking" approach – driven by the design consultancy IDEO and the Stanford and Potsdam d-schools – encourages designers to bring their methods into the business world, either by taking part in business processes themselves or by training business people to use design methods (after Tim Brown). More and more designers, not only "Design Thinking" proponents, push this direction, often viewing themselves in the role of a social conscience, a moderator, or even an elite that guides others. At the Interaction 2012 conference in Dublin, I attended a presentation by Jonathan Kahn expressing this point of view (Interaction Designers as Agents of Change) – and there are many more statements and books in this vein.

Milan Guenther's new book Intersection is just one of these books and is devoted to perhaps one of the biggest challenges that designers can face: The redesign of large corporations and other institutions, that is, the transformation of enterprises (the author uses this "umbrella term" for "companies, organizations, public services, and other types of projects or endeavors") from an actual, unsatisfying state into a future, desired state. According to Guenther, such a daunting endeavor has to be addressed in a holistic manner so as not to lose direction or get discouraged by the myriad details. In his book, he therefore tries to find a balance between dealing with complexity and maintaining a holistic view. He proposes and delineates an "enterprise design framework," which comprises 20 design aspects to be considered in strategic design initiatives. He clusters them into five groups that range from abstract concepts to concrete actions and are connected with appropriate design disciplines that serve as "methodological backbones." And that brings my introduction to the book full circle: All in all, Guenther's enterprise design framework brings 24 design disciplines into play – not just three, the number with which I started my introduction. And, of course, all of the directions that center on my "home turf" are included in one facet or another: human-centered design (or user-centered design), a-kind-of UI design, experience design, interaction design, information architecture, media design, a-kind-of visual design, and more.

So, if I have whetted your appetite for more, have a look at my review of Guenther's book Intersection.

References

- Milan Guenther (2012). Intersection: How Enterprise Design Bridges the Gap between Business, Technology, and People. Morgan Kaufmann • ISBN-10: 0123884357, ISBN-13: 978-0123884350 • Review

- Book Website: intersectionbook.com • Homepage Milan Guenther: www.guenther.cx • eda.c (Milan Guenther's design consultancy)

- Harold G. Nelson & Erik Stolterman (2012). The Design Way: Intentional Change in an Unpredictable World (2nd Ed.). The MIT Press • ISBN-10: 0262018179, ISBN-13: 978-0262018173 • Review

June 18, 2013: About the Book Gamification at Work

Gamification is becoming a common buzzword in business these days* – and in UI design as well. I first encountered the concept of gamification in more detail at the Interaction 2012 conference in Dublin, Ireland. There, Dustin DiTommaso gave the presentation Beyond Gamification: Architecting Engagement Through Game Design Thinking, in which he discussed self-determination theory and laid out a seven-step "framework for success" in gameful design. Regrettably, his presentation was not the critical examination of this topic that I had hoped for. Nevertheless, it inspired me to start writing an introductory article about gamification for the SAP Design Guild – but somehow I never managed to get beyond a first collection of ideas...

Figure 1: A progress indicator – a standard element of "game mechanics" (from the book)

While collecting materials for the article, I learned about points, badges, and leaderboards, but admittedly, the older I get, the less interested I am in competing with other people – including myself – so I have remained a gamification skeptic. Other people think differently and more positively about this approach, though. Take, for example, my SAP colleagues Janaki Kumar and Mario Herger. Just recently, they finished their book Gamification at Work – Designing Engaging Business Software, which will be published by the Interaction Design Foundation (IDF) shortly. In their book, they repeatedly point out that there is more to gamification of business applications than "simply adding game mechanics such as points, badges, and leaderboards to their applications and calling them gamified." Like DiTommaso, the authors provide a framework for success, this time for the gamification of business applications. Modeled on the User-Centered Design approach, their Player Centered Design framework consists of the following five steps:

- Know your player

- Identify the mission

- Understand human motivation

- Apply [game] mechanics

- Manage, monitor, and measure

Rikke Friis Dam of the IDF has already written a sneak preview of the book for the SAP UX Community. In this UI Design Blink, I would also like to point readers to the new book – without giving away too much information. Chapter 1, Mixing Work and Play, seems to have been written specifically for me, a gamification skeptic. After "busting" some myths about gamification, the authors surprise their readers with the statement that they (the readers) have already been gamified. I was amazed to learn that some well-known UI controls and principles, such as progress bars and feedback, are actually standard elements of "game mechanics." Thus, the annoying but nonetheless useful progress bar telling me that copying my user data from my old computer to my new one will take about 50 hours (a true example!) is just an attempt to gamify the situation and make it more fun. But perhaps I got it all wrong, because in the authors' example it's a little bit different: It's not the system's progress that is reported back, but the user's progress in doing something, such as completing his or her LinkedIn profile. I would like to add one more remark. In Chapter 8, Legal and Ethical Considerations, the authors point to possible legal and ethical consequences of gamifying business applications. The legal aspect had not even crossed my mind, but, as the authors explain, both have to be taken seriously.

I hope I have now whetted readers' appetites enough for them to rush to the preview of the book on the IDF Website – or even to the book itself as soon as it has been officially published.

*) This statement was taken from the book.

References

- Janaki Mythily Kumar & Mario Herger (2013). Gamification at Work: Designing Engaging Business Software. Interaction Design Foundation • ISBN-10: 8792964079, ISBN-13: 978-8792964076

- Gamification at Work (Interaction Design Foundation)

June 11, 2013: A Few Books and Links for Familiarizing Oneself with Charts and Dashboards (Data Visualization)

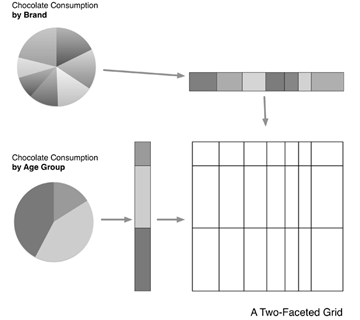

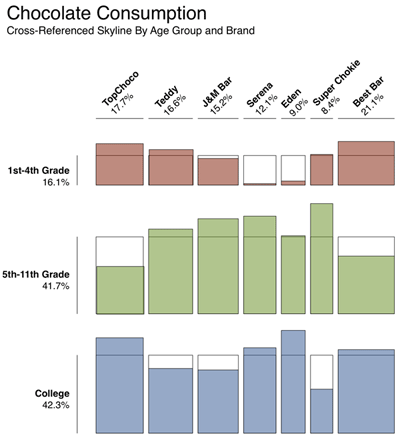

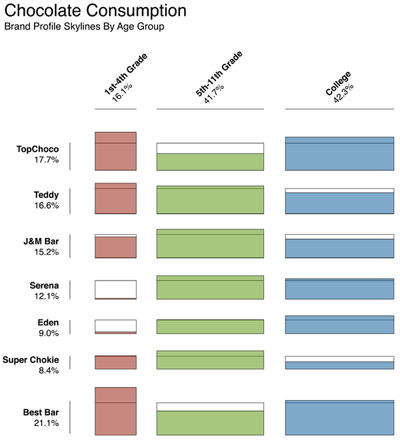

Books about information visualization do not usually include the design of charts and dashboards; this field, sometimes referred to as "data visualization," is covered separately. I am following this practice and presenting the books on this topic that I came across in a separate UI Design Blink.

Charts